this post was submitted on 08 Sep 2023

linuxmemes

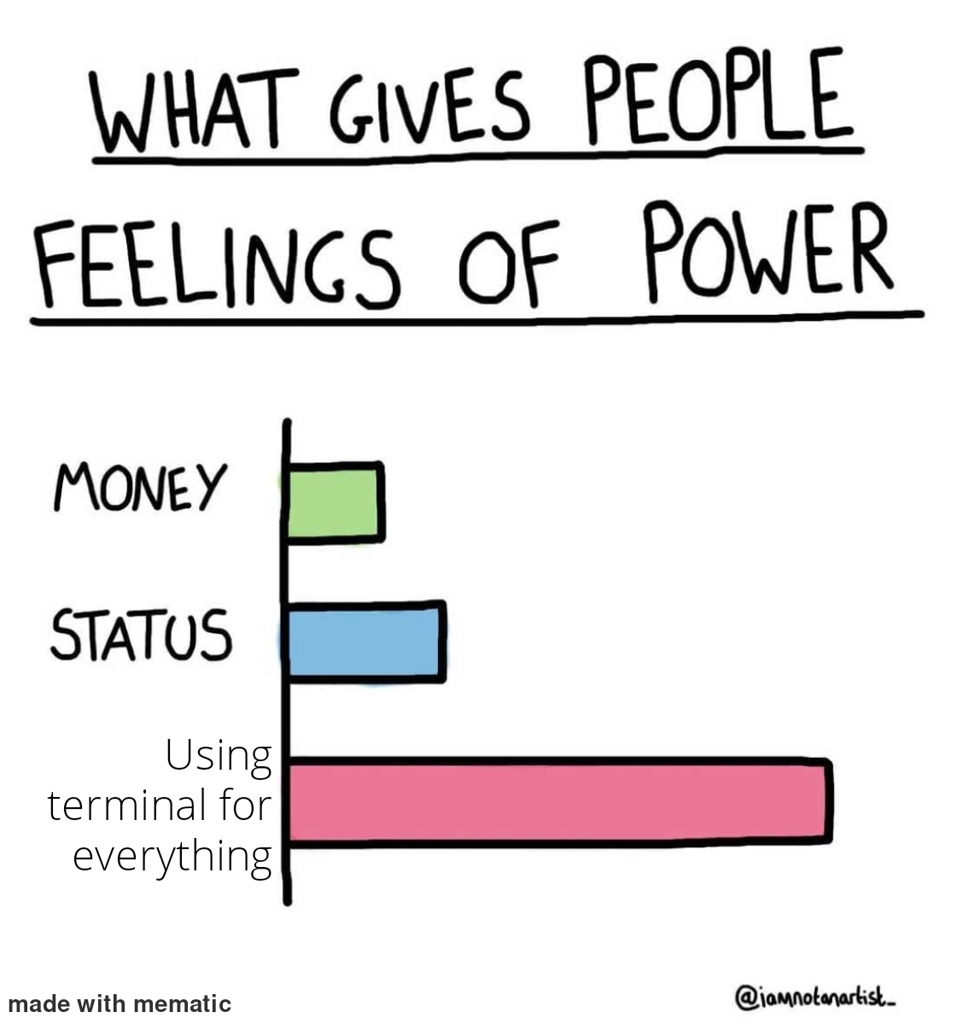

A dirty Linux admin here. Imagine you're ssh'd into an nginx log folder and all you want to know is which IPs have been beating against a certain URL over, let's say, the last seven days and getting `429`, most frequent first. In kiddie script it's like `find -mtime -7 -name "*access*" -exec zgrep "$some_damed_url" {} \; | grep 429 | awk '{print $3}' | sort | uniq -c | sort -rn | less`. It depends on how your logs look (and I assume you've been managing them, that's where the `zgrep` comes from), it should be run in `tmux`, and it could (should?) be written better 'n all. But my point is: do that for me in a GUI (I'm waiting ⏲).
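For anyone who wants to reuse that one-liner, here is a rough sketch of the same idea wrapped in a function. It is not the original command: the function name `top_429_ips` is made up, and it assumes nginx's default "combined" log format, where the client IP is field 1 and the status code is field 9. It also checks the status field itself instead of grepping for `429` anywhere in the line, so a response size of 429 bytes does not count as a hit.

```shell
#!/bin/sh
# Sketch: list client IPs that received HTTP 429 for a given URL in access
# logs modified within the last 7 days, most frequent first.
# Assumption: nginx "combined" log format (field 1 = client IP,
# field 9 = status code). Adjust the awk fields for other formats.
top_429_ips() {
    url=$1            # fixed string to look for, e.g. a request path
    log_dir=${2:-.}   # directory with access logs, plain or gzipped

    # zgrep reads both plain and gzip-compressed files; -F treats the URL
    # as a literal string rather than a regular expression.
    find "$log_dir" -type f -name '*access*' -mtime -7 \
        -exec zgrep -F -e "$url" {} \; |
        awk '$9 == 429 { print $1 }' |
        sort | uniq -c | sort -rn
}
```

Something like `top_429_ips /some/url /var/log/nginx | less` would then page through the counts; both the URL and the path here are placeholders.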

As a general rule, I have most of my app and system logs sent to a central log aggregation server. Splunk, Logentries, even CloudWatch can do this now.

But you are right, if there is an archaic server that you need to analyse logs from, nothing beats a find/grep/sed.