this post was submitted on 18 Jul 2023

Memes

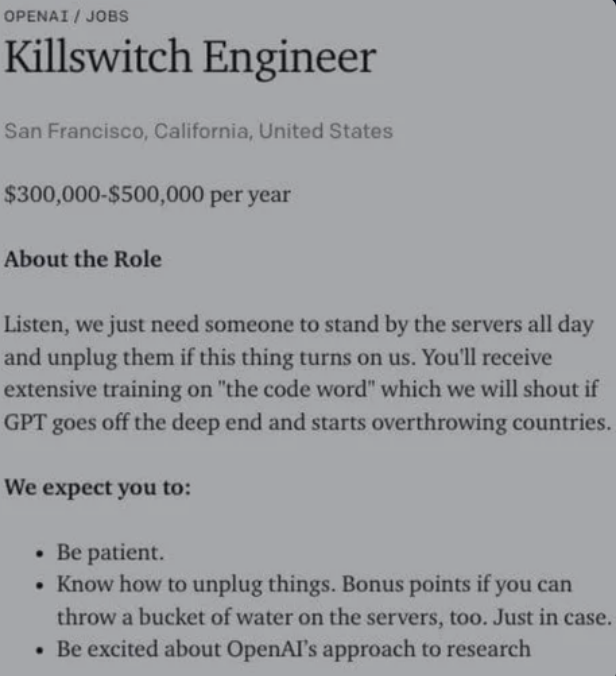

You get a job here and next thing you know, AI is targeting you.

The serious question is: with what? I doubt the AI is going to kill you with disgusting pics or existential philosophy.

This means they hooked it up to something that might be used as a weapon: an industrial printer or a t-shirt cannon or a gunship at port.

We live in a digital world now. No need to actually, physically harm someone. Or maybe the AI will file a fake complaint against someone and the cops will take care of that individual.

Actually, the possibility of socially engineered SWAT attacks on targets is a valid point. I noted some years ago that there are hospital devices that are now connected to the internet while they are in active use, such as those that administer medications intravenously based on timing and user input. While such a setup could kill a patient if someone reprogrammed the module, we've not seen an attack affect one yet.