this post was submitted on 17 Dec 2024

Technology

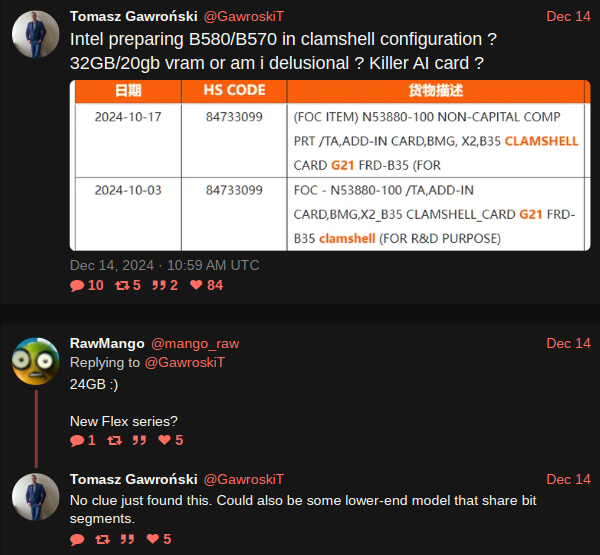

They would be incredible, as long as they're cheap. Intel would be utterly stupid for not doing this.

OpenAI does not make hardware. Also, their model progress has stagnated, already matched or surpassed by Google, Claude, and even some open-source Chinese models trained on far fewer GPUs... OpenAI is circling the drain, forget them.

The only "real" competitor to Nvidia, IMO, is Cerebras, which has a decent shot due to a silicon strategy Nvidia simply does not have.

The AMD MI300X is actually already "better" than Nvidia's best... but they just can't stop shooting themselves in the foot, as AMD does. Google TPUs are good, but Google-only, like Amazon's hardware. I am not impressed with Groq or Tenstorrent.

Yeah, I didn't mean to imply that either. I meant to write OneAPI. :D

It's just that I'm afraid Nvidia will get to the same point as Raspberry Pis, where even if there's better hardware out there, people still buy Raspberry Pis because of the available software and hardware accessories. That loops back to new software and hardware being aimed at Raspberry Pis because of their larger market share. And then it loops.

Now if someone gets a CUDA competitor going that runs equally well on Nvidia, AMD, and Intel GPUs, and it becomes efficient and fast enough to break that kind of self-strengthening loop, then I don't care if it's AMD's ROCm or Intel's OneAPI. I just hope it happens before it's too late.

They’re kinda already there :(. Maybe even worse than Raspberry Pis.

Intel has all but said they’re exiting the training/research market.

AMD has great hardware, but the MI300X is not gaining traction due to a lack of “grassroots” software support, and they were too stupid to undercut Nvidia and sell high-VRAM 7900s to devs, or even to prioritize support for them in ROCm. Same with their APUs. For all the marketing, they just didn’t prioritize getting them runnable with LLM software.