this post was submitted on 03 Jan 2024


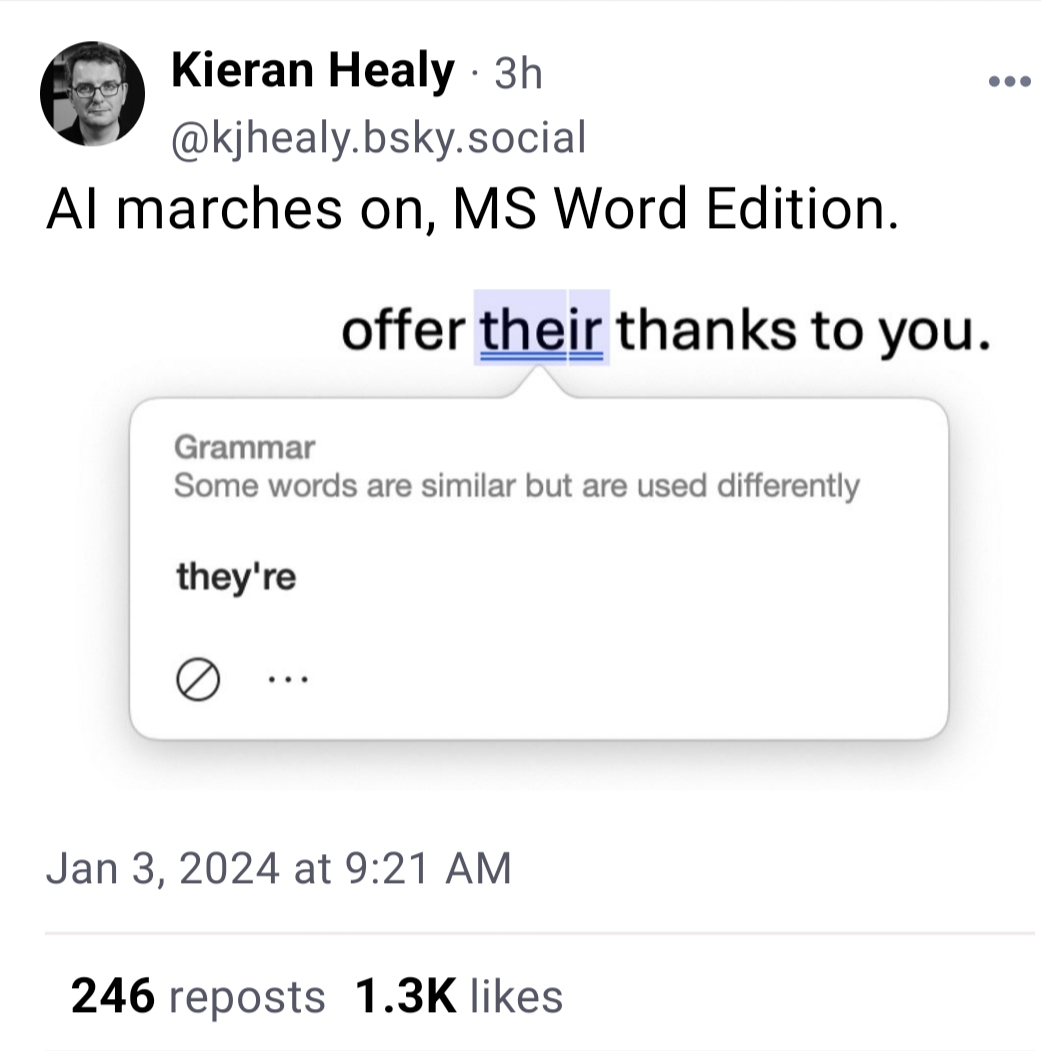

I've seen this with GPT-4. If I ask it to proofread text with errors, it consistently does a great job, but if I prompt it to proofread text without errors, it hallucinates them. It's funny to see Microsoft having the same issue.

I'm pretty sure MS uses GPT-4 as the foundation of all their AI stuff, so it's not surprising to see them have the same issues. Funny, as you said, but not surprising.