this post was submitted on 06 May 2024

2510 points (99.3% liked)

Science Memes


Welcome to c/science_memes @ Mander.xyz!




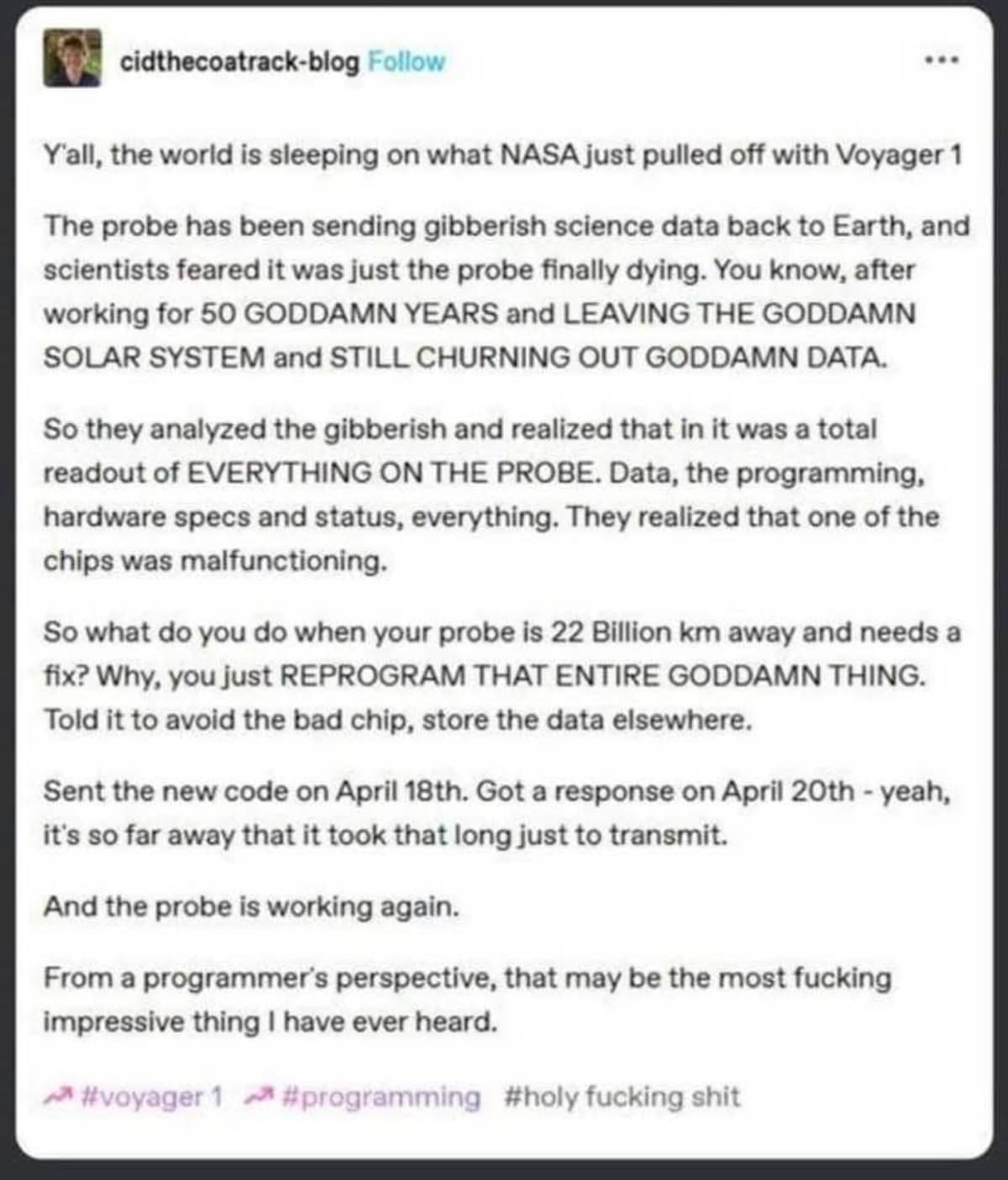

To me, the physics of the situation makes this all the more impressive.

Voyager has a 23 watt radio. That's about 10x as much power as a cell phone's radio, but it's still small. Voyager is so far away that the signal takes 22.5 hours to reach Earth, traveling at light speed. This is a radio beam, not a laser, but it's an extraordinarily tight beam for a radio: only about 0.5 degrees wide. Even so, by the time it arrives it's more than 10,000x wider than the Earth. It's being received by some of the biggest antennas ever made, but they're still only 70 m wide, so each one receives only a tiny fraction of the power transmitted. So they're decoding a signal of roughly 10^-18 watts.
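To sanity-check the arithmetic above, here's a rough order-of-magnitude link budget in Python. It's a sketch under simplifying assumptions: the beam is treated as a uniform cone 0.5 degrees wide, the distance is derived from the 22.5-hour light time, and antenna gain patterns, dish efficiency, and noise are all ignored. The Earth-diameter constant is my addition, not from the comment.

```python
import math

# Inputs from the comment above (approximate); Earth's diameter is an added constant.
C = 299_792_458.0                 # speed of light, m/s
TX_POWER_W = 23.0                 # transmitter power
ONE_WAY_HOURS = 22.5              # one-way light time
BEAM_WIDTH_DEG = 0.5              # full beam width, treated as a uniform cone (assumption)
DISH_DIAMETER_M = 70.0            # receiving antenna diameter
EARTH_DIAMETER_M = 12_742_000.0   # mean Earth diameter


def link_budget() -> dict:
    """Crude geometric link budget: what fraction of the beam lands on one dish."""
    distance_m = C * ONE_WAY_HOURS * 3600
    # Diameter of the beam footprint when it reaches Earth.
    footprint_m = 2 * distance_m * math.tan(math.radians(BEAM_WIDTH_DEG) / 2)
    # Captured fraction = dish area / footprint area (the pi/4 factors cancel).
    fraction = (DISH_DIAMETER_M / footprint_m) ** 2
    return {
        "distance_m": distance_m,
        "footprint_over_earth": footprint_m / EARTH_DIAMETER_M,
        "received_w": TX_POWER_W * fraction,
    }


budget = link_budget()
print(f"footprint / Earth diameter: {budget['footprint_over_earth']:,.0f}")
print(f"received power: {budget['received_w']:.1e} W")
```

With these inputs it comes out to about 2.5 × 10^-18 W at the dish and a footprint roughly 16,000 times Earth's diameter, so the 10^-18 W figure holds up as an order of magnitude.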

So, not only are you debugging a system created half a century ago without being able to see or touch it, you're doing it with a 2-day delay to see what your changes do, and using the most absurdly powerful radios just to send signals.

The computer side of things is also even more impressive than this makes it sound. A memory chip failed. On Earth, you'd probably track that down by physically inspecting the hardware and then probing it with a multimeter, an oscilloscope, or something similar. They couldn't do that. They had to debug it by watching the program as it ran, tried to use the faulty memory chip, and failed in interesting ways. They could interact with it, but only on a 2-day delay. They also knew that one wrong move could cost them what little control they had left, and then it would be fully dead.

So: a malfunctioning computer that you can only interact with at 40 bits per second, that takes 2 full days between every send and receive, that has flaky hardware, and that was designed more than 50 years ago.
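To put that feedback loop in numbers, here's a small Python sketch of one command-and-readback cycle. It assumes the 22.5-hour one-way light time and the 40 bps downlink mentioned in this thread, and it ignores uplink transmission time and onboard processing; the reply sizes are hypothetical.

```python
ONE_WAY_HOURS = 22.5   # one-way light time quoted in the thread
DOWNLINK_BPS = 40      # downlink rate quoted in the thread


def turnaround_hours(readback_bytes: int) -> float:
    """Hours from sending a command until a reply of `readback_bytes` has fully arrived.

    Counts the light-time round trip plus the time to clock the reply out at
    DOWNLINK_BPS; ignores uplink duration and onboard processing.
    """
    round_trip_s = 2 * ONE_WAY_HOURS * 3600
    transmit_s = readback_bytes * 8 / DOWNLINK_BPS
    return (round_trip_s + transmit_s) / 3600


for size in (0, 1024, 64 * 1024):  # hypothetical reply sizes in bytes
    print(f"{size:>6} bytes -> {turnaround_hours(size):.1f} h")
```

Even an empty reply costs 45 hours of pure light travel; a hypothetical 64 KiB memory readback at 40 bps adds roughly another 3.6 hours on top.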

And you explained all of that WITHOUT THE OBNOXIOUS GODDAMNS and FUCKIN SCIENCE AMIRITEs

Oh screw that, that's an emotional post from somebody sharing their reaction, and I'm fucking STOKED to hear about it, can't believe I missed the news!

Finally, something I can weigh in on. I've worked in memory testing for years, and I'll tell you that it's actually pretty expected for a memory cell to fail after some time. So much so that we typically build redundancy into the memory: we add more memory cells than will be active at any given time. When shit goes awry, we can reprogram the memory controller to remap the cells in use, so that the bad cells are mapped out and unused spares are mapped in. We don't typically probe memory cells unless we're doing some kind of in-depth failure analysis. Usually we just run a series of algorithms that test each cell, identify which ones aren't responding correctly, and map those out.
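One widely used family of "algorithms that test each cell" is March tests. Below is a toy Python sketch of one well-known variant, March C-, run against a simulated one-bit-per-address memory with injected stuck-at faults, followed by a remap that swaps failing addresses for spare cells. The `FaultyMemory` class, the spare addresses, and the plain-dict remap table are hypothetical stand-ins for real controller logic, not what any particular team actually uses.

```python
from typing import Dict, List, Optional, Sequence, Set


class FaultyMemory:
    """Toy 1-bit-per-address memory; addresses in `stuck_at` always read a fixed bit."""

    def __init__(self, size: int, stuck_at: Dict[int, int]):
        self.cells = [0] * size
        self.stuck_at = stuck_at

    def write(self, addr: int, bit: int) -> None:
        self.cells[addr] = bit

    def read(self, addr: int) -> int:
        return self.stuck_at.get(addr, self.cells[addr])


def march_c_minus(mem: FaultyMemory, size: int) -> Set[int]:
    """Run March C- over addresses [0, size) and return every address that read back wrong."""
    bad: Set[int] = set()
    up = range(size)
    down = range(size - 1, -1, -1)

    def element(order: Sequence[int], expect: Optional[int], write: Optional[int]) -> None:
        # One march element: per address, optionally read-and-check, then optionally write.
        for addr in order:
            if expect is not None and mem.read(addr) != expect:
                bad.add(addr)
            if write is not None:
                mem.write(addr, write)

    element(up, None, 0)    # (w0), either direction
    element(up, 0, 1)       # ascending (r0, w1)
    element(up, 1, 0)       # ascending (r1, w0)
    element(down, 0, 1)     # descending (r0, w1)
    element(down, 1, 0)     # descending (r1, w0)
    element(up, 0, None)    # (r0), either direction
    return bad


def build_remap(size: int, bad: Set[int], spares: List[int]) -> Dict[int, int]:
    """Map logical -> physical addresses, replacing each bad address with the next spare."""
    if len(bad) > len(spares):
        raise ValueError("not enough spare cells to repair this memory")
    spare_iter = iter(spares)
    return {addr: (next(spare_iter) if addr in bad else addr) for addr in range(size)}


# Usage: 16 main cells plus 4 spares (addresses 16-19), with two injected stuck-at faults.
mem = FaultyMemory(20, stuck_at={3: 0, 9: 1})
bad = march_c_minus(mem, 16)
remap = build_remap(16, bad, spares=[16, 17, 18, 19])
print(sorted(bad), remap[3], remap[9])
```

Real memories are word-oriented and are usually repaired by whole rows or columns, and the spares themselves should be tested before they're mapped in; this sketch skips both to keep the idea visible.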

None of this is to diminish the engineering challenges they faced, just to give an appreciation for the technical mechanisms we've improved over the last few decades.

50 years is plenty of time for the first memory chip to fail. Most systems would face total failure from multiple defects in half that time, even WITH physical maintenance.

Also remember it was built with tools from the 70s, which is probably an advantage, given that everything else is still going.

Definitely an advantage. Even setting planned obsolescence aside, older electronics are pretty tolerant of outside interference compared to modern ones.

Do you know how long that kind of redundancy has been around? Because Voyager is pretty old hardware.

Is there a Voyager 1, uh... emulator or something? Like something NASA would use to test new programming on before hitting send?

Today you'd have a physical duplicate of anything in orbit to test code changes on before pushing them to the real thing.

Huh. If it survives a few more years, it'll be a light-day away.
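A quick Python check of "a few more years", assuming Voyager 1 recedes at a constant speed of about 17 km/s (an approximate figure, not from this thread) starting from the 22.5 light-hours quoted at the top, and ignoring Earth's own orbital motion, which makes the light time wobble over the course of a year.

```python
C_KM_S = 299_792.458                  # speed of light, km/s
SPEED_KM_S = 17.0                     # approximate recession speed (assumption)
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def years_until_light_day(now_hours: float = 22.5, speed_km_s: float = SPEED_KM_S) -> float:
    """Years until the one-way light time reaches 24 hours, at constant speed."""
    remaining_km = (24.0 - now_hours) * 3600 * C_KM_S
    return remaining_km / speed_km_s / SECONDS_PER_YEAR


print(f"{years_until_light_day():.1f} years")
```

That works out to about 3 years, which fits "a few more years".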

~~They have a spare Voyager on Earth for debugging~~

EDIT: or not