Two days later...

this post was submitted on 17 Mar 2025

Programmer Humor

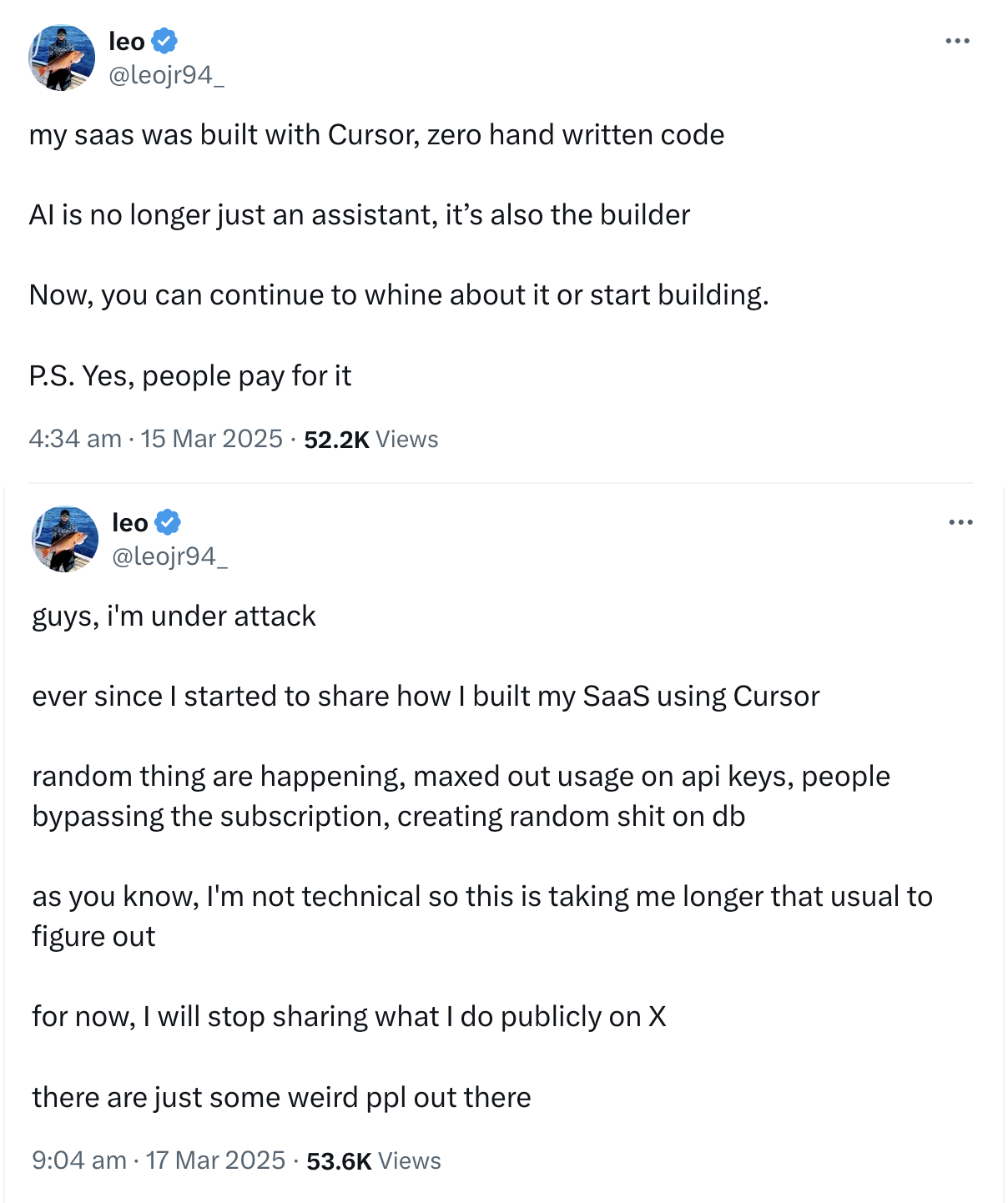

Ok, but what did they try to do as a SaaS?

Money.

But what site is he talking about?

Holy crap, it’s real!

But I thought vibe coding was good actually 😂

Vibe coding is a hilarious term for this too. As if it's not just letting AI write your code.

A taste of his own medicine.

2 days, LMAO

If I were leojr94, I’d be mad as hell about this impersonator soiling the good name of leojr94—most users probably don’t even notice the underscore.