Bonus points if the attackers use AI to script their attacks, too. We can fully automate the SaaS cycle!

That is the real dead Internet theory: everything from production to malicious actors to end users is all AI scripts wasting electricity and hardware resources for the benefit of no human.

Seems like a fitting end to the internet, imo. Or the recipe for the Singularity.

Not only internet. Soon everybody will use AI for everything. Lawyers will use AI in court on both sides. AI will fight against AI.

I was at a coffee shop the other day and two lawyers were discussing how they were using AI to do stuff they didn't know anything about and then just sending it to their clients.

That shit scared the hell out of me.

And everything will just keep getting worse, with more and more common folk swallowing the hype and the brainwashing, and using these highly inaccurate tools at every level of our society, every day, to make decisions about things they have no idea about.

I'm aware of an effort to get LLM AI to summarize medical reports for doctors.

Very disturbing.

The people driving it where I work tend to be the people who know the least about how computers work.

The Internet will continue to function just fine, just as it has for 50 years. It’s the World Wide Web that is on fire. Pretty much has been since a bunch of people who don’t understand what Web 2.0 means decided they were going to start doing “Web 3.0” stuff.

The Internet will continue to function just fine, just as it has for 50 years.

Sounds of intercontinental data cables being sliced

AI is yet another technology that enables morons to think they can cut out the middleman of programming staff, only to very quickly realise that we're more than just monkeys with typewriters.

We're monkeys with COMPUTERS!!!

Hilarious and true.

Last week some up-and-coming coder was showing me their tons and tons of sites made with the help of ChatGPT. They all look great on the front end. So I tried to use one. Error. Tried to use another. Error. I mentioned the errors and they brushed it off. I am 99% sure they do not have the coding experience to fix the errors. I politely disconnected from them at that point.

What's worse is when a non-coder asks me, a coder, to look over and fix their AI-generated code. My response is "no, but if you set aside an hour I will teach you how HTML works so you can fix it yourself." Not one of these kids asking AI to code things has ever accepted, which, to me, means they aren't worth my time. Don't let them use you like that. You aren't another tool they can combine with AI to generate things correctly without having to learn things themselves.

100% this. I've gotten to where when people try and rope me into their new million dollar app idea I tell them that there are fantastic resources online to teach yourself to do everything they need. I offer to help them find those resources and even help when they get stuck. I've probably done this dozens of times by now. No bites yet. All those millions wasted...

I've been a professional full stack dev for 15 years and dabbled for years before that - I can absolutely code and know what I'm doing (and have used cursor and just deleted most of what it made for me when I let it run)

But my frontends have never looked better.

Ha, you fools still pay for doors and locks? My house is now 100% done with fake locks and doors, they are so much lighter and easier to install.

Wait! Why am I always getting robbed lately? It cannot be my fake locks and doors! It has to be weirdos online following what I do.

"If you don't have organic intelligence at home, store-bought is fine." - leo (probably)

The fact that “AI” hallucinates so extensively and gratuitously just means that the only way it can benefit software development is as a gaggle of coked-up juniors making a senior incapable of working on their own stuff because they’re constantly in janitorial mode.

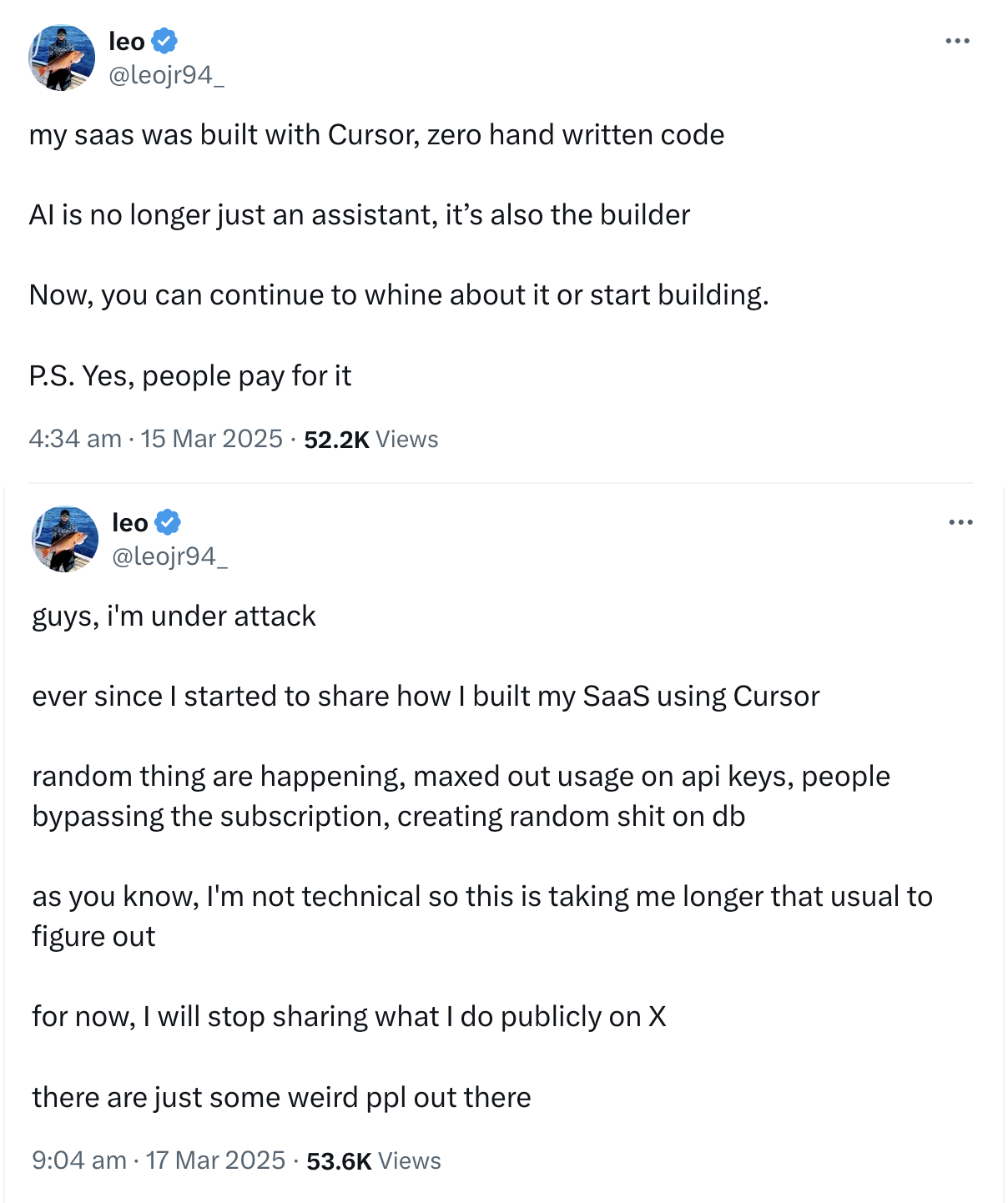

Is the implication that he made a super insecure program and left the token for his AI thing in the code as well? Or is he actually being hacked because others are coping?

Nobody knows. Literally nobody, including him, because he doesn't understand the code!

Nah the people doing the pro bono pen testing know. At least for the frontend side and maybe some of the backend.

AI writes shitty code that's full of security holes, and Leo here has probably taken zero steps to further secure his code. He broadcasts his AI-written software and it's open season for hackers.
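For anyone wondering what the "token left in the code" scenario looks like versus the boring fix: here is a minimal sketch, not a claim about what Leo's app actually does. It assumes the token lives in an environment variable; the name `AI_API_TOKEN` and both helper functions are made up for illustration.

```python
import os

# Hypothetical variable name, chosen for illustration only.
TOKEN_ENV_VAR = "AI_API_TOKEN"


def load_api_token(env=None):
    """Read the token from the environment instead of hardcoding it in source."""
    env = os.environ if env is None else env
    token = env.get(TOKEN_ENV_VAR, "").strip()
    if not token:
        # Fail loudly at startup rather than shipping a fallback secret.
        raise RuntimeError(f"{TOKEN_ENV_VAR} is not set; refusing to start")
    return token


def redact(token):
    """Mask the token for logs, leaving at most the last four characters visible."""
    if len(token) <= 4:
        return "*" * len(token)
    return "*" * (len(token) - 4) + token[-4:]
```

Calling `load_api_token()` with no argument reads from the real process environment. This only keeps the secret out of the repo and the frontend bundle; it does nothing about the rest of the security holes.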

Yes, yes, there are weird people out there. That's the whole point of having humans who understand the code and are able to correct it.

ChatGPT, make this code secure against weird people trying to crash and exploit it

beep boop

fixed 3 bugs

added 2 known vulnerabilities

added 3 race conditions

boop beeb

Reminds me of the days before AI assistants, when people copy-pasted code from forums and then you’d get questions like “I found this code and I know what every line does except this ‘for( int i = 0; i < 10; i ++)’ part. Is this someone using an unsupported expression?”
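For the record, that mystery line is just a counting loop. A rough sketch of its three parts, spelled out in Python (the function name `c_style_for` is made up for illustration):

```python
def c_style_for(start, stop):
    """Mimic `for (int i = start; i < stop; i++)` and return each value of i."""
    visited = []
    i = start              # int i = 0  (initialise once)
    while i < stop:        # i < 10     (checked before every pass)
        visited.append(i)  # the loop body would run here
        i += 1             # i++        (runs after every pass)
    return visited
```

So `c_style_for(0, 10)` walks through the same values as Python's `range(10)`, i.e. 0 through 9.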

An otherwise meh article concluded with "It is in everyone’s interest to gradually adjust to the notion that technology can now perform tasks once thought to require years of specialized education and experience."

Much as we want to point and laugh - this is not some loon's fantasy. This is happening. Some dingus told spicy autocomplete 'make me a database!' and it did. It's surely as exploit-hardened as a wet paper towel, but it functions. Largely as a demonstration of Kernighan's law.

This tech is borderline miraculous, even if it's primarily celebrated by the dumbest motherfuckers alive. The generation and the debugging will inevitably improve to where the machine is only as bad at this as we are. We will be left with the hard problem of deciding what the software is supposed to do.

I hope this is satire 😭

This feels like the modern version of those people who gave out the numbers on their credit cards back in the 2000s and would freak out when their bank accounts got drained.

This is satire / trolling for sure.

LLMs aren't really at the point where they can spit out an entire program, including handling deployment, environments, etc. without human intervention.

If this person is 'not technical' they wouldn't have been able to successfully deploy and interconnect all of the pieces needed.

The AI may have been able to spit out snippets, and those snippets may be very useful, but as it stands, it's just not going to be able to write the software, stand up the DB, and deploy all of the services needed with no human supervision or overrides. With human guidance, sure, but without someone holding the AI's hand it just won't happen (remember, this person is 'not technical').

Idk, I've seen some crazy complicated stuff woven together by people who can't code. I've got a friend who has no job and is trying to make a living off coding while, for 15+ years, being totally unable to learn coding. Some of the things they make are surprisingly complex. Though also, and the person mentioned here may do similarly, they don't ONLY use AI. They use GitHub a lot too. They make nearly nothing themselves, but go through GitHub and basically combine large chunks of code others have made with AI-generated code. Somehow they do it well enough to have done things with servers, cryptocurrency, etc., all the while not knowing any coding language.

This is what happens when you don't know what your own code does: you lose the ability to manage it. That is precisely why AI won't take programmers' jobs.

I took a web dev boot camp. If I were to use AI I would use it as a tool and not the motherfucking builder! AI gets even basic math equations wrong!

Was listening to my go-to podcast during morning walkies with my dog. They brought up an example where some couple was using ShatGPT as a couple's therapist, and what a great idea that was. They also talked about how one of the podcasters has more of a friend-like relationship with "their" GPT.

I usually find this podcast quite entertaining, but this just got me depressed.

ChatGPT is by the same company that stole Scarlett Johansson's voice. The same vein of companies that thinks it's perfectly okay to pirate 81 terabytes of books, despite definitely being able to afford paying the authors. I don't see a reality where it's ethical or indicative of good judgement to trust a product from any of these companies with information.

AI can be incredibly useful, but you still need someone with the expertise to verify its output.

“Come try my software! I’m an idiot, so I didn’t write it and have no idea how it works, but you can pay for it.”

to

“🎵How could this happen to meeeeee🎵”

Eat my SaaS

ITT: "Haha, yah AI makes shitty insecure code!"

Two days later...