This kind of scaling issue is new to Codeberg (a nonprofit free software project), but not to the world. All projects on earth likely went through this at a certain point or will experience it in the future.

When people like me talk about scaling... It's about increasing computing power, distributed storage, replicated databases and so on. There are all kinds of technology available to solve scaling issues. So why, damn, is Codeberg still having performance issues from time to time?

...we face the "worst" kind of scaling issue in my perception. That is the kind you don't see coming: there is no warning, such as the software getting slower day by day or the storage pool filling up. Instead, it appears out of the blue.

The hardest scaling issue is: scaling human power.

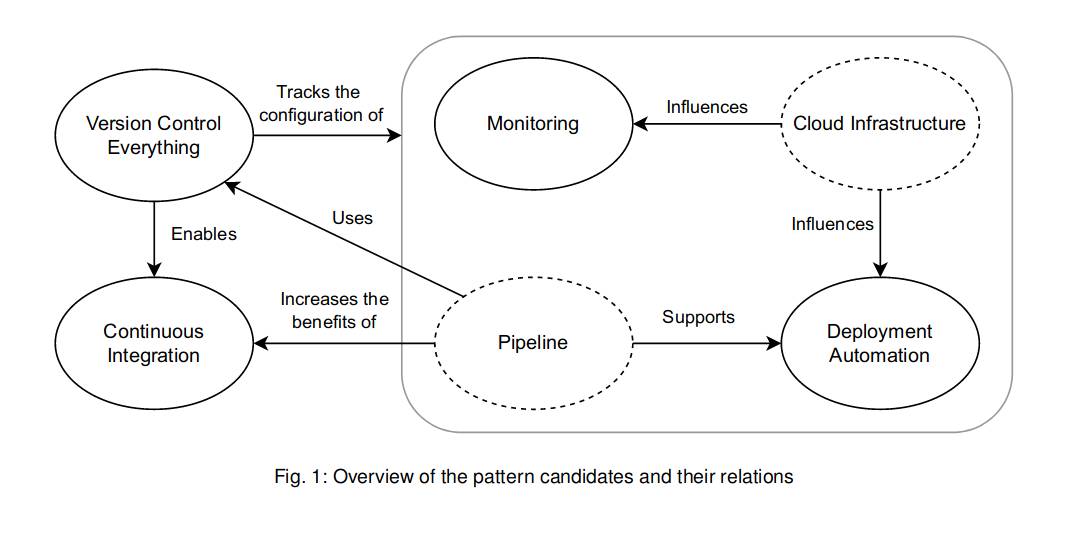

Configuration, Investigation, Maintenance, User Support, Communication – all require some effort, and it's not easy to automate. In many cases, automation would consume even more human resources to set up than we have.

There are no paid night shifts, and no payment at all. Still, people have become used to the always-available guarantees, and demand the same from us: Occasional slowness in the evening of the CET timezone? Unbearable!

I do understand the demand. We definitely aim for a better service than we sometimes provide. However, sometimes, the frustration of angry social-media-guys carries me away...

two primary blockers that prevent scaling human resources. The first one is: trust. Because we can't yet afford to hire employees who work on tasks for a defined amount of time, work naturally has to be distributed over many volunteers with limited time commitment... second problem is in part technical. Unlike major players, which have nearly unlimited resources available to meet high demand, scaling Codeberg's systems...

TLDR: Codeberg has sustainability issues with scaling because it is a nonprofit with very limited resources, mainly human resources, in the face of high demand. Unpaid volunteers do all the work, so it needs more volunteers and more money.

Just use a community-led or nonprofit-foundation-led distro: NixOS (better than Silverblue/Kinoite in all the aspects they try to sell), Arch, or Debian.

For professional usage, you generally go with Ubuntu or some RHEL derivative.