i'll admit, i have thought of making a commie llm (so like, comradegpt? lol) sometime ago

i wonder if i should do it at some point, especially if we're told to learn about ai and neural networking in the uni

Talk about whatever, respecting the rules established by Lemmygrad. Failing to comply with the rules will grant you a few warnings, insisting on breaking them will grant you a beautiful shiny banwall.

A community for comrades to chat and talk about whatever doesn't fit other communities

i'll admit, i have thought of making a commie llm (so like, comradegpt? lol) sometime ago

i wonder if i should do it at some point, especially if we're told to learn about ai and neural networking in the uni

I started crawling the Portuguese version of the MIA and there was definitely enough data there alone to fine tune one (about 30 GB but a lot of it was pdfs). Just be aware that it requires a lot of data preparation and each trial takes a while on civilian hardware, which is why I postponed it. If there's interest here I'd be happy to collaborate.

Also seems like a fun way to read a lot of diverse texts.

Edit: but be aware that LLMs are inherently unreliable and we shouldn't trust them blindly like libs trust chatGPT.

Just be aware that it requires a lot of data preparation and each trial takes a while on civilian hardware, which is why I postponed it.

How long is it? Just so you know, I'm a total newbie at LLM creation/AI/Neural Networking.

Edit: but be aware that LLMs are inherently unreliable and we shouldn’t trust them blindly like libs trust chatGPT.

So... if they're inherently unreliable, why make them? Genuine question.

How long is it? Just so you know, I’m a total newbie at LLM creation/AI/Neural Networking.

I have never done a full run on an LLM training, but on the lab we used to have language models training for like 1-2 weeks making full use of 4 2080s IIRC. Fine tuning is generally faster than training so it'd be around a day or two on that hardware, but I don't have access to it anymore (and don't think it'd be ethical to use it for personal projects either way). On personal hardware I think it would again be back at the week mark. Since it's an iterative process sometimes one might want to either have multiple training runs with different parameters in parallel or repeatedly train to try and solve issues. There are some cloud options with on-demand GPUs but then we'd need to be spending money.

The bulk of the work is actually on making sure the data is appropriate and then validating if the model works correctly, which a lot of researchers tend to skimp on in their papers, and in practice is usually done by low paid interns or MTurk contractors.

So… if they’re inherently unreliable, why make them? Genuine question.

Cynical answer, stock exchange hype. Investors get really interested whenever we get human-looking stuff like text, voice, walking robots and synthetic flesh, even if those things have very little ROI on the technical side. Just look at people talking about society being managed by AI in the future despite most investment going into human-facing systems rather than logistics optimisations.

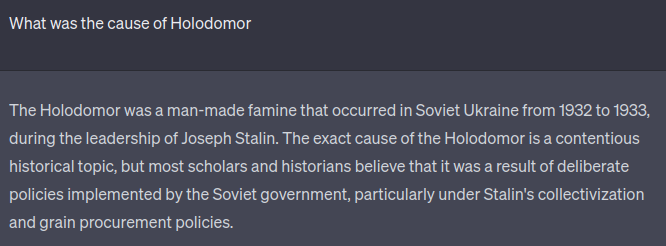

The main issue (incredibly oversimplified) with ChatGPT is that due to it working on a text probability level, it can sometimes create some really convincing and human-sounding text, that is either completely false or contains subtle misrepresentations. It also has a lot of trouble providing accurate sources for what it says. Or it can mimic what appears like "human memory" by referring to previously said things, but that's just emergent behaviour and you can easily "convince" it that it has said things it had not, for instance. Also the data can get so large that some stuff that shouldn't be there can get in there as well, like how ChatGPT 3 is supposed to have a knowledge cut-off in September 2021, but it can sometimes answer questions about the war in Ukraine.

ChatGPT can still be useful for bouncing ideas around, getting some broad overviews, text recommendations or creative writing experimentation. They're also fun to dunk on if you're bored on the bus. I think this would be a fun project, but if do it, we should always have a big red disclaimer that goes "this bot is dumb sometimes, actually read a book."

Here's an example of how bad chatGPT is at sources. Bing has direct access to the internet and can sometimes fetch sources, but I'm not sure how that works and if it is feasible with our non-Microsoft-level resources.

CW libshit

turns out OpenAI isn't actually open

It was actually started for completely open (Free) AI stuff but then they realised it wasn't making any money. Also Musk was involved with it, I think he's been ousted though. Hard to know with the guy who bought the founder title in Tesla.

this is why we need gommusim

can sometimes answer questions about the war in Ukraine.

I thought the war started in 2014?

Well yes, but the one it talked about was the SMO. Ukraine didn't exist to libs before then. I tried replicating it and they seem to have fixed it now, but the other day I also managed to get it to talk about the Wagner "rebellion" so there's definitely some data leakage in there.

I say do it!

commiebot ftw.

i wish you good fortune in such endeavours

o7

https://twitter.com/aakashg0/status/1667756466944770048

China made one called InternLM.

Guess what subjects it performs best on?

Mao culture and Marxism.

LLMs output what you put in.

In this case, the Chinese made a communist AI.

That looks fantastic, we could nudge the developers a bit and they'll likely know how to help out. Sadly I don't know any Chinese (yet), but nothing is too much of a hurdle for big nerds. The model is so big that HuggingFace was having trouble building it, but people can try it out here now. The English responses are alright and we could probably even just host it somewhere.

Pinging people who showed interest here: @rjs001@lemmygrad.ml, @Alunyanners@lemmygrad.ml, @comradeRichard@lemmygrad.ml, @RedWizard@lemmygrad.ml.

I think that would be awesome, I'm still learning a lot and that could be helpful.

I do also believe that my understanding of communism and my place in it calls for a lot more interpersonal connections than I cultivate 🥴

I've been messing with bard a little seeing what questions it will answer and how those answers line up with what I've been learning from lately and how I've been fed a steady diet of propaganda in US schools... And it's easy to see from the questions I've asked that it SEEMS to have been trained on a good bit of factual info but clearly from the lens of "liberal democracy=good" even as far as acknowledging that many conflicts in my life, if asked about the situations, as I've come to understand them, that happened initially destabilizing certain areas, had "fear of communism" high in the list of reasons. Obviously it's a program trained on certain information, and with guardrails installed. If asked about how much devastation the US has caused related to fearing communism it will talk about that, but if you ask for example "why has the US supported fascists" I got a road block and it stopped answering lmao.

Obviously just anecdotal about me playing with an existing program, and I'd love to see one trained on a large variety of different leftist theory and writings. It would still need to be used with the broad understanding that in the end, it's just a program that takes input and processes it and spits some info out, and shouldn't be trusted more than pointing us in directions for more study.

I think it would be an interesting project for sure.

hah yeah, i was trying to talk to another ML about this - explicitly having a gpt4 bot or something like chatbot but with communist leaning and sources to multiple points like books and etc

IIRC Microsoft and Google are both working on a citation-based LLM

Is the first character in your display name an amogus?

( ͡° ͜ʖ ͡°)

sus