At this point I would set up a new account for her - I’ve found Youtube’s algorithm to be very… persistent.

Unfortunately, it's linked to the account she uses for her job.

You can make "brand accounts" on YouTube that are a completely different profile from the default account. She probably won't notice if you make one and switch her to it.

You'll probably want to spend some time using it for yourself secretly to curate the kind of non-radical content she'll want to see, and also set an identical profile picture on it so she doesn't notice. I would spend at least a week "breaking it in."

But once you've done that, you can probably switch to the brand account without logging her out of her Google account.

I love how we now have to monitor what the generation that told us "Don't believe everything you see on the internet" watches, like we would for children.

We can thank all that leaded (tetraethyllead) gasoline that was pumping lead into the air from the '20s to the '70s. Everyone got a nice healthy dose of lead while they were young. Made 'em stupid.

OP's mom breathed nearly 20 years' worth of lead-polluted air straight from birth, and OP's grandmother had been breathing it for 33 years by the time OP's mom was born. Probably not great for early development.

I'm a bit disturbed how people's beliefs are literally shaped by an algorithm. Now I'm scared to watch Youtube because I might be inadvertently watching propaganda.

My personal opinion is that it's one of the first large-scale cases of misalignment in ML models. I'm 90% certain that Google and other platforms have for years been using ML models that take a user's history and whatever other data they have about them as input and output which videos to offer, with the goal of maximizing the time the user spends watching videos (or on Facebook, etc.).

And the models eventually found out that if you radicalize someone, isolate them inside a conspiracy that makes them an outsider or a nutjob, and then provide a safe space and an echo chamber on the platform, be it Facebook or YouTube, they will eventually start spending most of their time there.

I think this subject was touched upon in the movie The Social Dilemma, but given what is happening in the world, with conspiracies and disinformation seemingly getting more and more common and people more radicalized, I'm almost certain that the algorithms are to blame.

The damage that corporate social media has inflicted on our social fabric and political discourse is beyond anything we could have imagined.

In the Google account privacy settings you can delete the watch and search history. You can also delete a service such as YouTube from the account without deleting the account itself. This might help her start afresh.

Sorry to hear about your mom, and good on you for trying to steer her away from the crazy.

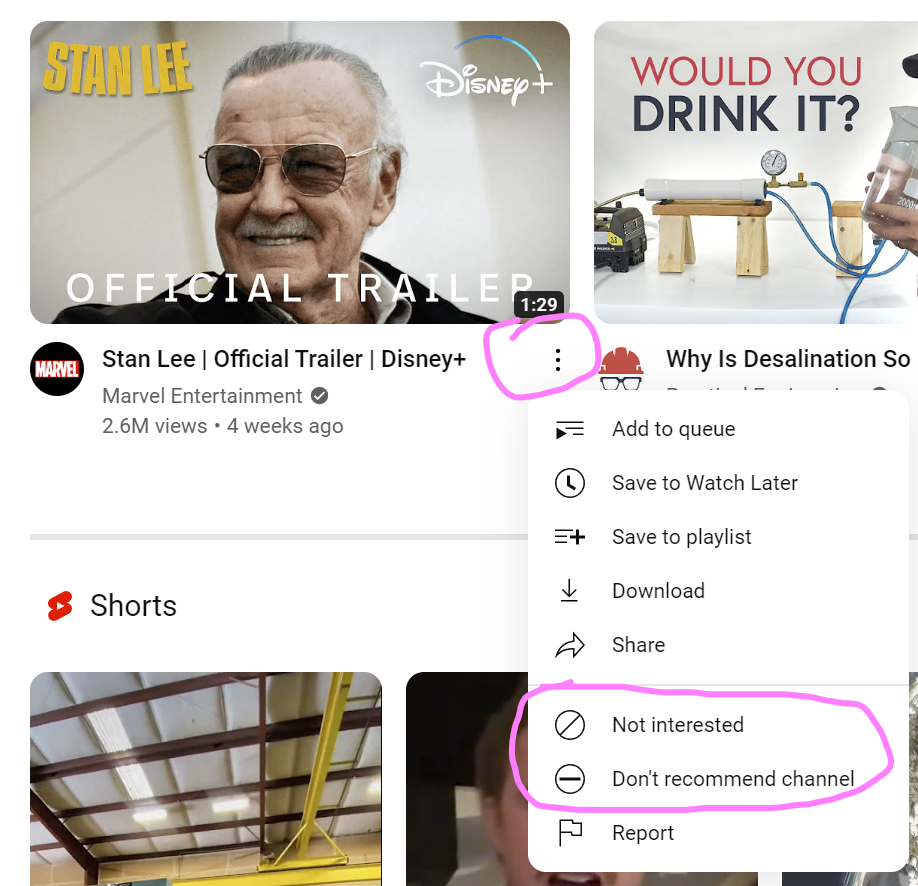

You can retrain YT's recommendations by going through the suggested videos, and clicking the '...' menu on each bad one to show this menu:

(no slight against Stan, he's just what popped up)

Click the Don't recommend channel or Not interested button. Do this as many times as you can. You might also want to try subscribing to and watching a bunch of wholesome ones that your mum might be interested in (hobbies, crafts, travel, history, etc.) to push the recommendations in a direction that will meet her interests.

Edit: mention subscribing to interesting, good videos, not just watching.

You might also want to try watching a bunch of wholesome ones that your mum might be interested in (hobbies, crafts, travel, history, etc.) to push the recommendations in a direction that will meet her interests.

This is a very important part of the solution here. The algorithm adapts to new videos very quickly, so watching some things you know she's into will definitely change the recommended videos pretty quickly!

Log in as her on your device. Delete the history, turn off ad personalisation, unsubscribe from and block dodgy stuff, like and subscribe to healthier things, and this is the important part: keep coming back regularly to tell YouTube you don't like any suggested videos that go down the QAnon path, and remove dodgy watched videos from her history.

Also, subscribe and interact with things she'll like - cute pets, crafts, knitting, whatever she's likely to watch more of. You can't just block and report, you've gotta retrain the algorithm.

Delete all watched history. It will give her a whole new set of videos, like a new algorithm.

Where does she watch her YouTube videos? If it's on a computer and you're a little techie, switch to Firefox, then change the user agent to Nintendo Switch. I find that YouTube serves fewer crazy videos to the Nintendo Switch.

This never worked for me. BUT WHAT FIXED IT WAS LISTENING TO SCREAMO METAL. APPARENTLY YOUTUBE KNEW I WAS A LEFTIST THEN. Weird, but I've been free ever since.

This comment made me laugh so hard and I don't know why... I love it!

Here is what you can do: make a bet with her on things she thinks are going to happen within a certain timeframe, and tell her that if she's right, it should be easy money. I don't like gambling; I just find it easier to convince people they might be wrong when they have to put something at stake.

A lot of these crazy YouTube cult channels have gotten fairly insidious: they will at first lure you in with cooking, travel, and credit card tips, then direct you to their affiliated QAnon-style channels. You have to watch out for those too.

they will at first lure you in with cooking, travel, and credit card tips

Holy crap. I had no idea. We've heard of a slippery slope, but this is a slippery sheer vertical cliff.

Like that toxic meathead Rogan luring the curious in with DMT stories and the like, and it sounds like that particular spore has burst and spread.

Rogan did not invent this. This is the modern version of Scientology's free e-meter reading, and cults have always done similar things for recruitment. You shouldn't be paranoid and suspicious of everyone, but you should know that these things exist and watch out for them.

I think it's sad how so many of the comments are sharing strategies about how to game the Youtube algorithm, instead of suggesting ways to avoid interacting with the algorithm at all, and learning to curate content on your own.

The algorithm doesn't actually care that it's promoting right-wing or crazy conspiracy content; it promotes whatever keeps people's eyeballs on Youtube. And that will always be the most enraging content. Using the "Not interested" and "Block this channel" buttons doesn't make the algorithm stop trying to push this content; you're just teaching it to refine its strategy for manipulating you!

The long-term strategy is to get people away from engagement algorithms. Introduce OP's mother to a patched Youtube client that blocks ads and algorithmic feeds (ReVanced has this). "Youtube with no ads!" is an easy way to convince non-technical people. Help her subscribe to safe channels and monitor what she watches.

Not everyone is willing to switch platforms that easily. You can't always be idealistic.

In addition to everything everyone here said, I want to add this: don't underestimate the value of adding new benign topics to her feed. Does she like cooking, gardening, DIY, or art content? Find a playlist from a creator and let it autoplay. The algorithm will pick it up and start to recommend that creator and others like it. You just need to "confuse" the algorithm so it starts to cater to different interests. I wish there was a way to block or mute entire subjects on there. We need to protect our parents from this mess.
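Here's a toy model of why feeding it new topics works. This is a minimal sketch under a big assumption, not how YouTube actually works (their system isn't public): it pretends the recommender keeps one weight per topic, fades older signals a bit on every new event, and recommends the highest-weighted topics. The class name `InterestProfile`, the `decay` value, and the topic labels are all made up for illustration.

```python
from collections import defaultdict


class InterestProfile:
    """Toy recommender state: one decaying weight per topic.

    Hypothetical model for illustration only; YouTube's real system is not public.
    """

    def __init__(self, decay: float = 0.8):
        self.decay = decay                 # fraction of old signal kept after each new event
        self.weights = defaultdict(float)  # topic -> current interest weight

    def record(self, topic: str, signal: float = 1.0) -> None:
        """Fade every existing weight, then add the new signal.

        A watch is a positive signal; something like "Not interested"
        can be modelled as a negative one.
        """
        for t in self.weights:
            self.weights[t] *= self.decay
        self.weights[topic] += signal

    def clear_history(self) -> None:
        """Toy version of wiping watch history: forget everything."""
        self.weights.clear()

    def top_topics(self, n: int = 2) -> list:
        """Return up to n topics with positive weight, strongest first."""
        ranked = sorted(self.weights.items(), key=lambda kv: kv[1], reverse=True)
        return [t for t, w in ranked[:n] if w > 0]


if __name__ == "__main__":
    profile = InterestProfile(decay=0.8)
    for _ in range(10):
        profile.record("conspiracy")
    print(profile.top_topics(1))  # ['conspiracy']
    for _ in range(5):
        profile.record("crafts")
    print(profile.top_topics(1))  # ['crafts']
```

With a decay of 0.8, five recent crafts videos outweigh ten older conspiracy ones in this toy, which mirrors the "it adapts quickly" experience people describe here, though the real thing is far more complex.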

Do the block and uninterested stuff but also clear her history and then search for videos you want her to watch. Cover all your bases. You can also try looking up debunking and deprogramming videos that are specifically aimed at fighting the misinformation and brainwashing.

My mother’s partner had some small success. On the one hand, he did what you're already doing: unsubscribing from the bad stuff and subscribing to other things she might enjoy (nature channels, religious stuff that isn’t so toxic, arts and crafts...), and he also blocked some tabloid news on the router. On the other hand, he tried getting her outside more: they go on long hikes now and travel around a lot. He also helped her find some hobbies (the arts and crafts one) and sometimes sits with her, showing lots of interest and giving positive affirmations when she does them. Since religion is so important to her, they also often go to a (not so toxic or cultish) church, church events, the choir and so on.

She’s still into it to an extent. The anti-vax stuff will probably never change, since she can’t trust doctors and hates needles, and unfortunately she still votes accordingly (which is far right in my country). But she’s calmed down a lot and isn’t quite so radical and full of fear all the time.

Edit: oh, and as for myself, I found a book, "How to Have Impossible Conversations". Some of the topics in there can be weird, but it helped me stay somewhat calm when confronted with outlandish beliefs and not reinforce them, though who knows how much that helps, if at all (I think her partner contributed a LOT more than me).

Reading this makes me realize I'm not as alone as I thought. My mother too has gone completely out of control in the last 3 years with every sort of conspiracy theory (freemasonry, 5 families ruling the world, radical anti-vax, this Pope is not the real Pope, the EU is to blame for everything, etc.). I manage to "agree to disagree" most of the time, but it's tough sometimes... People, no matter their degree of education, love simple explanations for complex problems and an external scapegoat for their failures. This kind of content will always have an appeal.

Yeah, you are not alone at all; there is an entire subreddit for this, r/qanoncasualties. While my mother doesn’t know it, a lot of this stuff comes from this Q thing. Why else would a person like her in Austria care so much about Trump or Hillary Clinton or a guy like George Soros? A lot of it is also thinly veiled, borderline Nazi stuff (it’s always the Jews or other races or minority groups at fault). That’s the next thing: she says she hates Nazis, and yet they got her to vote far right and support all sorts of agendas. There is some mental trick happening there where "the left are the actual Nazis".

Well, she was always a bit weird about religion, aliens, wonder cures and other conspiracy stuff, but in recent years it really got turned toward these political aims. It feels malicious and it was distressing to me, though I’m somewhat at peace with it now. The world is a crazy place and out of my control; I just try to do the best I can with what I can affect.

I've found that if I remove things from my history, it stops suggesting related content, hopefully that helps.

YouTube has a delete option that will wipe the recorded history. Then just watch a couple of videos and subscribe to some healthy stuff.

As you plan on messing with her feed, I'd like to warn you that a sudden change in her recommendations could seem to her like the whole internet got censored and she can't see the truth anymore. She would be cut off from a sense of community and a sense of having special inside knowledge, and that may make things worse rather than better.

My non-professional prediction is that she would get bored with nothing to worry about and start actively seeking out bad news to worry over.

I’d like to warn you that a sudden change in her recommendations could seem to her like the whole internet got censored and she can’t see the truth anymore.

This is exactly the response my neighbors have: "Things get censored, so the gov must be hiding this info." It's truly insane to see this happen.

You can manage all recommendations by going to account > Your data in YouTube

From there you can remove the watch history and search history or disable them entirely. It should make it a lot easier to reset the recommendations.

Go into the viewing history and wipe it. Then maybe view some more normal stuff to set it on a good track. That should give a good reset for her, though it wouldn't stop her from just looking it up again, of course.

If you have access to her computer instead of just her account, you could try installing the extension channel block (Firefox | Chrome). With it you can block individual channels so that videos from them will NEVER EVER show up in sidebar recommendations, search results, subscription feeds, etc. You can also set up regex queries to block videos by title. Be careful with the pattern, though: in a regex, "nuke*" means "nuk" followed by any number of "e"s, so it catches "nuke", "nukes" and "nuked" but not "nuclear"; a query like "nuke|nuclear" covers both.
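To illustrate the regex title blocking, here's a minimal Python sketch. It assumes the extension simply hides any video whose title matches one of your patterns anywhere in the title; I haven't checked its exact matching rules or regex flavour, and the pattern list and titles below are made up. "nuclear" gets its own entry because `nuke*` never matches it.

```python
import re

# Hypothetical blocklist for illustration; real extensions keep patterns in
# their own settings UI, and their regex flavour may differ slightly.
BLOCKED_TITLE_PATTERNS = [
    r"\bnuke",        # any word starting with "nuke": nuke, nukes, nuked
    r"\bnuclear\b",   # spelled differently, so it needs its own pattern
]


def compile_patterns(patterns):
    """Compile each pattern once, ignoring case."""
    return [re.compile(p, re.IGNORECASE) for p in patterns]


def is_blocked(title, compiled):
    """True if any pattern matches anywhere in the title."""
    return any(rx.search(title) for rx in compiled)


def filter_titles(titles, patterns=BLOCKED_TITLE_PATTERNS):
    """Keep only titles that no blocklist pattern matches."""
    compiled = compile_patterns(patterns)
    return [t for t in titles if not is_blocked(t, compiled)]


if __name__ == "__main__":
    feed = [
        "They Want to NUKE the Economy",
        "Nuclear Winter Is Coming",
        "Easy Sourdough for Beginners",
        "Why Nukes Exist",
    ]
    print(filter_titles(feed))  # ['Easy Sourdough for Beginners']
    # The pitfall: "nuke*" = "nuk" + zero or more "e", so it never matches "nuclear".
    print(bool(re.search(r"nuke*", "Nuclear Winter", re.IGNORECASE)))  # False
```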

I curate my feed pretty often so I might be able to help.

The first, and easiest, thing to do is to tell Youtube you aren't interested in their recommendations. If you hover over the name of a video, three little dots will appear on the right side. Clicking them opens a menu that contains, among many others, two options: Not Interested and Don't Recommend Channel. Don't Recommend Channel doesn't actually remove the channel from recommendations, but it will discourage the algorithm from recommending it as often. Not Interested will also inform the algorithm that you're not interested; I think it discourages the entire topic, but that's not clear to me.

You can also unsubscribe from channels that you don't want to see as often. Youtube will recommend you things that were watched by other people who are also subscribed to the same channels you're subscribed to. So if you subscribe to a channel that attracts viewers with unsavory video tastes then videos that are often watched by those viewers will get recommended to you. Unsubscribing will also reduce how often you get recommended videos by that content creator.
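To make the "people who subscribe to the same channels" point concrete, here's a toy collaborative-filtering sketch. It's an illustration under assumptions, not YouTube's actual recommender: it scores each video by how many subscriptions its viewers share with you, and all user names, channel names, and video IDs are invented.

```python
from collections import Counter

# Invented toy data: who subscribes to what, and who watched what.
SUBSCRIPTIONS = {
    "mom":   {"CookingChan", "RageNews"},
    "alice": {"CookingChan", "GardenChan"},
    "carol": {"RageNews", "ConspiracyHub"},
}
WATCHED = {
    "alice": {"sourdough-101", "tomato-tips"},
    "carol": {"outrage-clip", "hidden-truth"},
}


def recommend(user, subscriptions, watched, top_n=None):
    """Rank videos watched by other users, weighted by shared subscriptions.

    The more channels another user has in common with `user`, the more
    their watched videos count. Videos `user` already watched are skipped.
    """
    mine = subscriptions.get(user, set())
    seen = watched.get(user, set())
    scores = Counter()
    for other, their_subs in subscriptions.items():
        if other == user:
            continue
        overlap = len(mine & their_subs)  # number of shared subscriptions
        if overlap == 0:
            continue
        for video in watched.get(other, set()) - seen:
            scores[video] += overlap
    return [video for video, _ in scores.most_common(top_n)]


if __name__ == "__main__":
    print(sorted(recommend("mom", SUBSCRIPTIONS, WATCHED)))
    # ['hidden-truth', 'outrage-clip', 'sourdough-101', 'tomato-tips']
    # Unsubscribing from RageNews removes carol's videos from the candidates.
    pruned = {**SUBSCRIPTIONS, "mom": {"CookingChan"}}
    print(sorted(recommend("mom", pruned, WATCHED)))
    # ['sourdough-101', 'tomato-tips']
```

In this toy, the single shared "RageNews" subscription is what pulls the conspiracy viewer's videos into mom's candidates, which is the mechanism the comment above describes.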

Finally, you should watch videos you want to watch. If you see something that you like then watch it! Give it a like and a comment and otherwise interact with the content. Youtube knows when you see a video and then go to the Channel's page and browse all their videos. They track that stuff. If you do things that Youtube likes then they will give you more videos like that because that's how Youtube monetizes you, the user.

To de-radicalize your mom's feed, I would try to:

- Watch videos that you like on her feed. This introduces them to the algorithm.

- Use Not Interested and Don't Recommend Channel to slowly phase out the old content.

- Unsubscribe from some channels she doesn't watch a lot of, so she won't notice.

HEAVY use of "Don't Recommend Channel" is essential to make YouTube tolerable. Clobber all the garbage.

Delete her YouTube history entirely. Rebuild the recommendation algo from scratch.

In Google's settings you can turn off watch history and nuke what's there, which should affect recommendations to a degree. I recommend you export her YouTube subscriptions to the creators she likes (the ones that aren't QAnon), set up an account on an Invidious instance, and import those subs. This has the benefit of no ads and no tracking or algorithmic recommendations. I think the recommendations can be turned off too. FreeTube for desktop and NewPipe for Android are great third-party YouTube scrapers. The YouTube subs can also be imported into an RSS feed reader.
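For the RSS route: YouTube publishes a per-channel feed at `https://www.youtube.com/feeds/videos.xml?channel_id=...`, and most feed readers can import an OPML file. Here's a minimal Python sketch that turns an exported subscriptions CSV into OPML while skipping channels you want to leave behind. The CSV column names ("Channel Id", "Channel Title") are an assumption about the Google Takeout export, so check the header row of your own file; the sample channel IDs are made up.

```python
import csv
import io
import xml.etree.ElementTree as ET

# Per-channel feed URL that YouTube serves for RSS/Atom readers.
FEED_URL = "https://www.youtube.com/feeds/videos.xml?channel_id={}"


def read_subscriptions(csv_text):
    """Parse exported subscriptions into (channel_id, title) pairs.

    ASSUMPTION: the export has "Channel Id" and "Channel Title" columns.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        (row["Channel Id"].strip(), row["Channel Title"].strip())
        for row in reader
        if row.get("Channel Id")
    ]


def to_opml(subscriptions, skip_ids=frozenset()):
    """Build an OPML document with one RSS outline per kept channel."""
    opml = ET.Element("opml", version="2.0")
    head = ET.SubElement(opml, "head")
    ET.SubElement(head, "title").text = "YouTube subscriptions"
    body = ET.SubElement(opml, "body")
    for channel_id, title in subscriptions:
        if channel_id in skip_ids:
            continue  # leave the unwanted channels out of the new reader
        ET.SubElement(
            body, "outline",
            type="rss", text=title, title=title,
            xmlUrl=FEED_URL.format(channel_id),
        )
    return ET.tostring(opml, encoding="unicode")


if __name__ == "__main__":
    sample = (
        "Channel Id,Channel Url,Channel Title\n"
        "UC_example_craft,http://www.youtube.com/channel/UC_example_craft,Craft Corner\n"
        "UC_example_bad,http://www.youtube.com/channel/UC_example_bad,Hidden Truth\n"
    )
    subs = read_subscriptions(sample)
    print(to_opml(subs, skip_ids={"UC_example_bad"}))
```

Save the printed output as something like `subscriptions.opml` and import it into whichever feed reader she ends up using.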

Make her a new account, pre-train it with some wholesome stuff and explicitly block some shit channels.

Oof that’s hard!

You may want to try the following though to clear the algorithm up

Clear her YouTube watch history: This will reset the algorithm, getting rid of a lot of the data it uses to make recommendations. You can do this by going to “History” on the left menu, then clicking on “Clear All Watch History”.

Clear her YouTube search history: This is also part of the data YouTube uses for recommendations. You can do this from the same “History” page, by clicking “Clear All Search History”.

Change her ‘Ad personalization’ settings: This is found in her Google account settings. Turning off ad personalization will limit how much the ads she sees are targeted based on her data (it affects ads rather than video recommendations).

Introduce diverse content: Once the histories are cleared, start watching a variety of non-political, non-conspiracy content that she might enjoy, like cooking shows, travel vlogs, or nature documentaries. This will help teach the algorithm new patterns.

Dislike, not just ignore, unwanted videos: If a video that isn’t to her taste pops up, make sure to click ‘dislike’. This will tell the algorithm not to recommend similar content in the future.

Manually curate her subscriptions: Unsubscribe from the channels she’s not interested in, and find some new ones that she might like. This directly influences what content YouTube will recommend.

First, unsub from the worst channels and report a few of the worst channels in the general feed with "Don't show me this anymore". Then go into the actual Google profile settings, not just YouTube's. Delete and pause (turn off) watch history, and even web history. It will still creep back up eventually, but it's temporary relief.

I listen to podcasts that talk about conspiratorial thinking and they tend to lean on compassion for the person who is lost. I don't think that you can brow-beat someone into not believing this crap, but maybe you can reach across and persuade them over a lot of time. Here are two that might be useful (the first is on this topic specifically, the second is broader):

https://youarenotsosmart.com/transcript-qanon-and-conspiratorial-narratives/
https://www.qanonanonymous.com

I wish you luck and all the best. This stuff is awful.

Switch her to FreeTube on desktop. Can still subscribe to keep a list of channels she likes, but won't get the YouTube algorithm recommendations on the home page.

For mobile, something like NewPipe gives the same algorithm-free experience.

This is a suggestion with questionable morality BUT a new account with reasonable subscriptions might be a good solution. That being said, if my child was trying to patronize me about my conspiracy theories, I wouldn't like it, and would probably flip if they try to change my accounts.

you can try selecting "don't recommend this channel" on some of the more radical ones!

I just want to share my sympathies on how hard it must be when she goes and listens to those assholes on YouTube and believes them but won't accept her family's help telling her what bullshit all that is.

I hope you get her out of that zone, OP.

Greasemonkey with scripts that block keywords or channels. This is the only real option besides taking YouTube away from the old bat.