(Also, here's an album: https://imgur.com/a/H4EZCdV. I changed the topic link to show the render, for obvious visibility. The album includes the geonodes node setups and the gist of what I use for most materials.)

Hi all, I'm an on/off Blender hobbyist. I started this "project" because a friend of mine baited me into it a bit, so I went with it. The idea is to make a "music video" of sorts: gloomy music, a camera fly/walkthrough of a spoopy house, all that cheesy stuff.

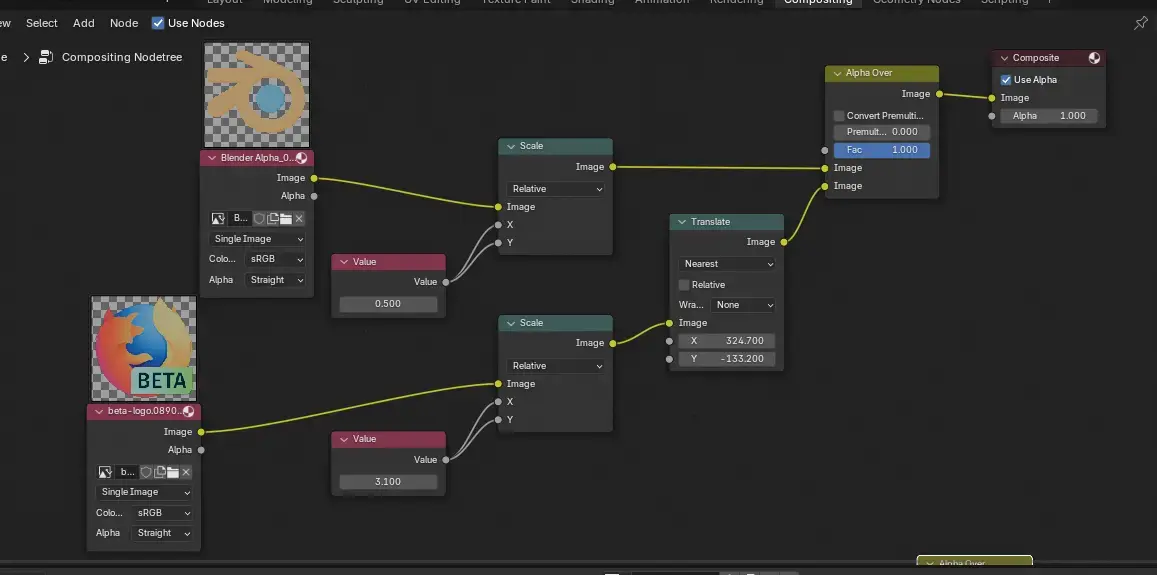

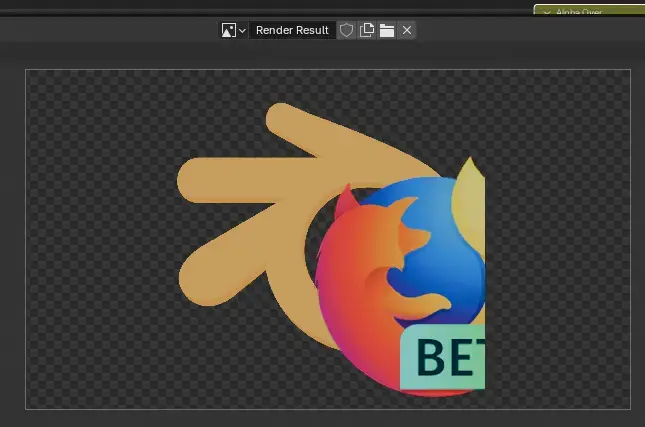

It basically started with the geonodes (shown in the imgur album): a simple setup that generates walls and baseboards around a floor mesh and applies the given materials to them. Nothing fancy, but it lets me prototype the building layout quickly.
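The real thing is a geometry node tree (see the album), so this is not that setup. Purely to illustrate the general idea, here's a minimal plain-Python sketch of one way a "walls around a floor" generator can work: find the floor mesh's boundary edges (edges used by only one face) and raise a vertical quad along each. The function names and the example floor are made up for illustration.

```python
from collections import Counter

Vec3 = tuple[float, float, float]
Face = tuple[int, ...]


def boundary_edges(faces: list[Face]) -> list[tuple[int, int]]:
    """Return the directed edges used by exactly one face.

    Interior edges are shared by two faces; the ones left over form the
    outline of the floor, which is where the walls go.
    """
    counts: Counter = Counter()
    directed = []
    for face in faces:
        for i in range(len(face)):
            a, b = face[i], face[(i + 1) % len(face)]
            counts[frozenset((a, b))] += 1
            directed.append((a, b))
    return [(a, b) for a, b in directed if counts[frozenset((a, b))] == 1]


def extrude_walls(verts: list[Vec3], faces: list[Face], height: float):
    """Raise a vertical quad of the given height along every boundary edge.

    Returns (all_vertices, wall_faces); raised copies are appended after the
    original floor vertices and shared between neighbouring wall quads.
    """
    new_verts = list(verts)
    wall_faces = []
    raised = {}  # floor vertex index -> index of its copy at wall height
    for a, b in boundary_edges(faces):
        for v in (a, b):
            if v not in raised:
                x, y, z = verts[v]
                raised[v] = len(new_verts)
                new_verts.append((x, y, z + height))
        wall_faces.append((a, b, raised[b], raised[a]))
    return new_verts, wall_faces


# Example: a 2 x 1 floor made of two unit quads.
floor_verts = [(0, 0, 0), (1, 0, 0), (2, 0, 0), (0, 1, 0), (1, 1, 0), (2, 1, 0)]
floor_faces = [(0, 1, 4, 3), (1, 2, 5, 4)]
verts, walls = extrude_walls(floor_verts, floor_faces, height=2.5)
print(len(walls))  # 6 wall quads; the shared middle edge gets no wall
```

Baseboards could plausibly reuse the same outline with a small height and a slight inward offset, but that part is left out here.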

The scene uses some assets from blendswap:

- https://blendswap.com/blend/14139 - furniture. I redid the materials as they were way too bright for the direction I wanted to go, but the modeling on the furniture is top notch: a bit low-poly, but nothing a subsurf modifier can't fix.

- https://blendswap.com/blend/25115 - the dinner on the table. I also tweaked its material quite a bit; the original was way too shiny and lacked SSS.

Thanks to the authors of these blendswaps <3

edit: the images in the paintings on the wall are spooky paintings I found via Google image search, but damn it, I can't recall the search term to give props. I'm a failure.

The sources for the wall/floor textures are lost to time; I've had these in my stash for like a decade. Wish I could make these on my own :/

zstd is generally stupidly fast and quite efficient.

probably not exactly how Steam does it, or even close, but as a quick & dirty comparison I compressed and decompressed a random CD.iso (~375 MB) I had lying about with both zstd and lzma, using a 1MB dictionary:

test system: Arch Linux (btw, as is customary) laptop with an AMD Ryzen 7 PRO 7840U CPU.

used commands & results:

Zstd:

So, pretty quick all around.

Lzma:

This one felt like forever to compress.

So, my takeaway here is that lzma's compression time cost is high enough that it's worth wasting a bit of disk space for the sake of speed.

and lastly, just because I was curious, I ran zstd at its max compression settings too:

~11s compression time, ~0.5s decompression, archive size was ~211 MB.

deemed it wasn't necessary to spend the time compressing the archive with lzma's max settings.
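For anyone who wants to repeat a rough comparison like this from Python instead of the CLI tools, here's a minimal sketch. It times a compress/decompress round trip with the standard-library `lzma` module (xz container, LZMA2 with a 1 MiB dictionary) and, only if the third-party `zstandard` package happens to be installed, with zstd using a 1 MiB window. The levels (xz's default preset 6, zstd's default level 3) are my assumptions, not the settings from the runs above, and in-process timings won't exactly match the command-line tools.

```python
import lzma
import time


def time_roundtrip(name, compress, decompress, data: bytes) -> dict:
    """Compress and decompress `data`, returning the ratio and wall-clock times."""
    t0 = time.perf_counter()
    packed = compress(data)
    t1 = time.perf_counter()
    unpacked = decompress(packed)
    t2 = time.perf_counter()
    assert unpacked == data, f"{name}: round trip mismatch"
    return {"name": name, "ratio": len(packed) / len(data),
            "compress_s": t1 - t0, "decompress_s": t2 - t1}


# Assumed settings: LZMA2 preset 6 with the dictionary capped at 1 MiB.
LZMA_FILTERS = [{"id": lzma.FILTER_LZMA2, "preset": 6, "dict_size": 1 << 20}]


def lzma_codec():
    compress = lambda d: lzma.compress(d, format=lzma.FORMAT_XZ, filters=LZMA_FILTERS)
    return compress, lzma.decompress


def zstd_codec(level: int = 3):
    """Optional: needs the third-party `zstandard` package (raises ImportError otherwise)."""
    import zstandard
    # window_log=20 means a 2**20 byte (1 MiB) window.
    params = zstandard.ZstdCompressionParameters.from_level(level, window_log=20)
    cctx = zstandard.ZstdCompressor(compression_params=params)
    dctx = zstandard.ZstdDecompressor()
    return cctx.compress, dctx.decompress


def compare(data: bytes) -> list[dict]:
    results = [time_roundtrip("lzma", *lzma_codec(), data)]
    try:
        results.append(time_roundtrip("zstd", *zstd_codec(), data))
    except ImportError:
        pass  # zstandard not installed: report lzma only
    return results


def report(path: str) -> None:
    """Print a one-line summary per codec for the file at `path`."""
    with open(path, "rb") as f:
        payload = f.read()
    for r in compare(payload):
        print(f"{r['name']}: ratio {r['ratio']:.1%}, "
              f"compress {r['compress_s']:.2f}s, decompress {r['decompress_s']:.2f}s")
```

Note that this reads the whole file into memory, which is fine for a ~375 MB image on most machines but not a great idea for much bigger files.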

Now I'll be taking notes when people start correcting me & explaining why these "benchmarks" are wrong :P

edit:

goofed a bit with the max compression settings; fixed it by adding the same dictionary size.

edit 2: one of the reasons for the change might be syncing files between their servers. IIRC zstd can compress files in an "rsync-friendly" way (its `--rsyncable` option), which allows partial file syncs instead of re-syncing the entire file, saving bandwidth. Not sure if lzma does the same.
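To illustrate why "rsync-friendly" compression helps, here's a toy sketch of the general idea, not zstd's actual implementation: cut the input at content-defined points chosen by a rolling hash (a gear hash here) and compress each chunk independently (with stdlib `zlib` as a stand-in compressor). Since a cut depends only on the last 32 bytes, a small edit changes only the compressed chunks around it, while with one continuous stream everything after the edit would typically differ. The hash table, mask and chunk sizes are hypothetical values picked for the demo.

```python
import random
import zlib

# Hypothetical parameters for illustration only (not zstd's real values).
_rng = random.Random(1234)
GEAR = [_rng.getrandbits(32) for _ in range(256)]  # one random value per byte value
CUT_MASK = 0xFFC00000  # cut when the top 10 bits are zero: ~1 KiB average chunks


def chunk_boundaries(data: bytes) -> list[int]:
    """Content-defined cut points using a gear rolling hash.

    Each step shifts the 32-bit hash left by one, so a byte stops affecting
    it after 32 more bytes: a cut depends on nearby content, not on the
    absolute offset in the file.
    """
    cuts, h = [], 0
    for i, byte in enumerate(data):
        h = ((h << 1) + GEAR[byte]) & 0xFFFFFFFF
        if (h & CUT_MASK) == 0:
            cuts.append(i + 1)
    if not cuts or cuts[-1] != len(data):
        cuts.append(len(data))
    return cuts


def compress_chunks(data: bytes) -> list[bytes]:
    """Compress each content-defined chunk on its own."""
    out, start = [], 0
    for cut in chunk_boundaries(data):
        out.append(zlib.compress(data[start:cut]))
        start = cut
    return out


def shared_fraction(old: bytes, new: bytes) -> float:
    """Fraction of the new file's compressed chunks already present in the old one."""
    old_chunks = set(compress_chunks(old))
    new_chunks = compress_chunks(new)
    return sum(c in old_chunks for c in new_chunks) / len(new_chunks)


# Demo: insert a few bytes near the start of a text-like file.
words = [b"spooky", b"house", b"candle", b"dinner", b"painting", b"creak"]
rng = random.Random(7)
original = b" ".join(rng.choice(words) for _ in range(20000))
edited = original[:5000] + b" a small edit " + original[5000:]
print(f"{shared_fraction(original, edited):.0%} of compressed chunks unchanged")
```

The trade-off is a slightly worse ratio, because each chunk is compressed without the context of the data before it; real implementations work differently in the details, so treat this purely as a picture of the concept.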