DefendingAIArt is a subreddit run by mod "Trippy-Worlds," who also runs the sister debate subreddit AIWars. Some poking around made clear that AIWars is perfectly fine with having overt Nazis around: for example, a guy with "heil hitler" in his username who accuses others of lying because they are "spiritually jewish." So we're off to a great start.

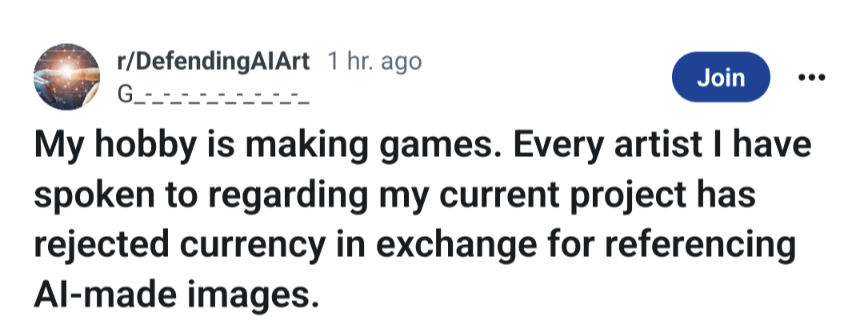

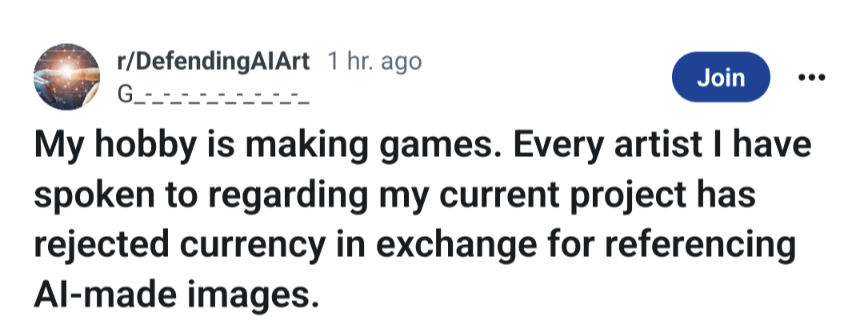

the first thing that drew my eye was this post from a would-be employer:

not really clear what the title means, but this person seems to have had a string of encounters with the most based artists of all time.

he also claims he was called "racial and gender slurs" for using ai art, that he was "kicked out of 20 groups," and some other things. idk what to tell this guy. it legitimately does suck that wealthy people can afford to pay for lots of art and the rest of us can't.

I really enjoyed browsing around this subreddit, and a big part of that was seeing how much the stigma around AI gets to the people who want to use it. pouring contempt on this stuff is good for the world.

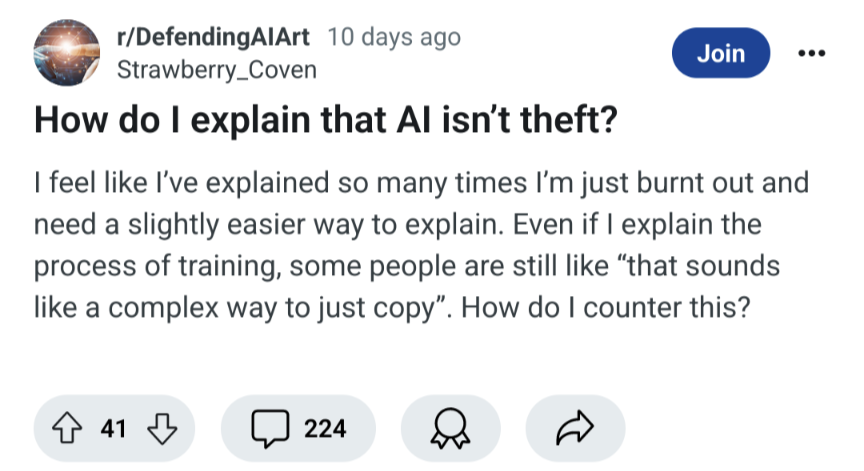

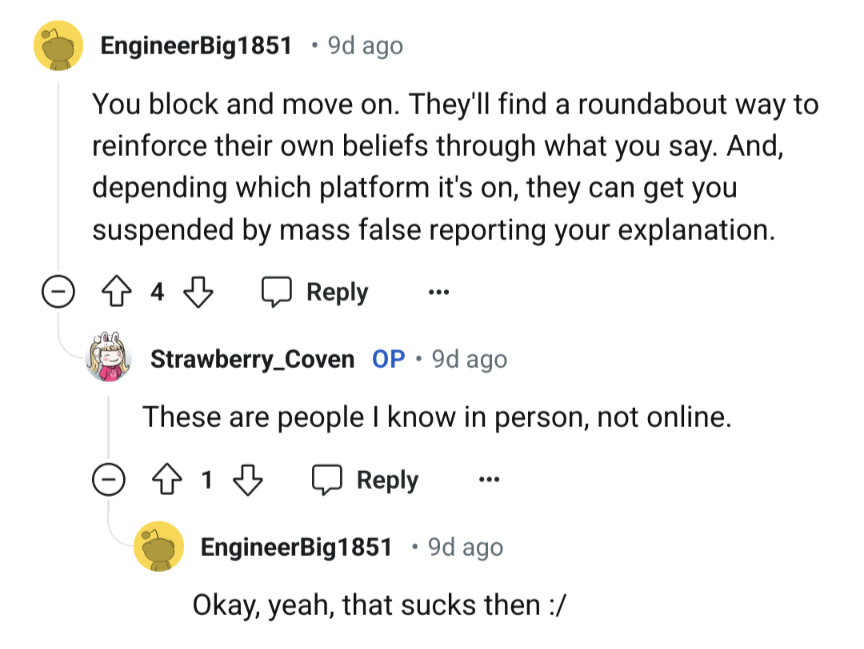

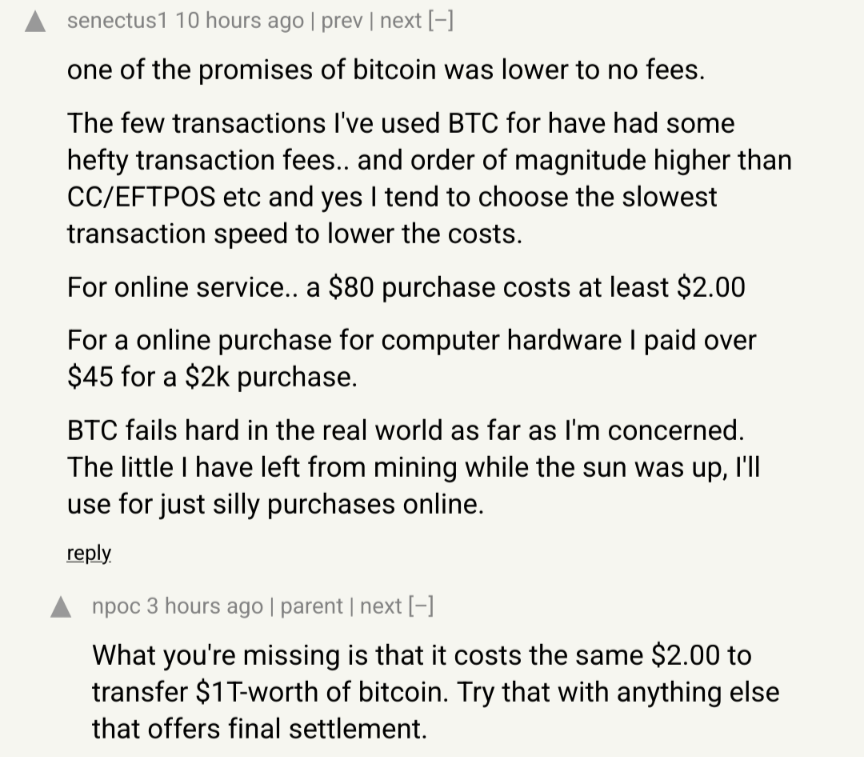

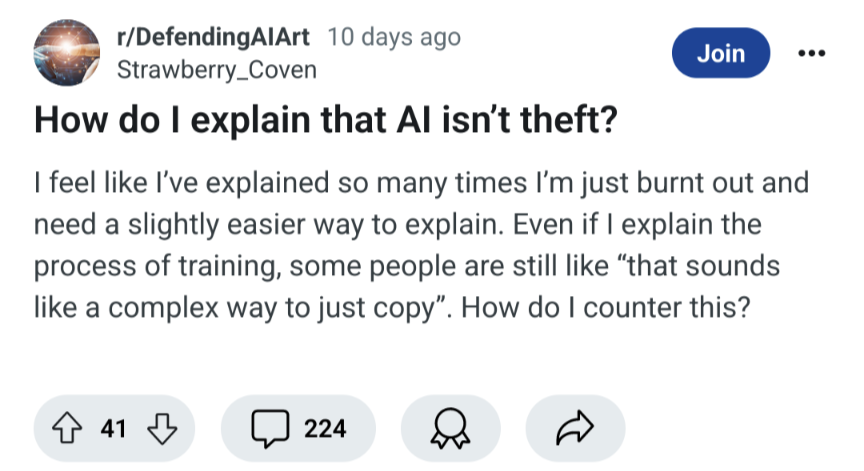

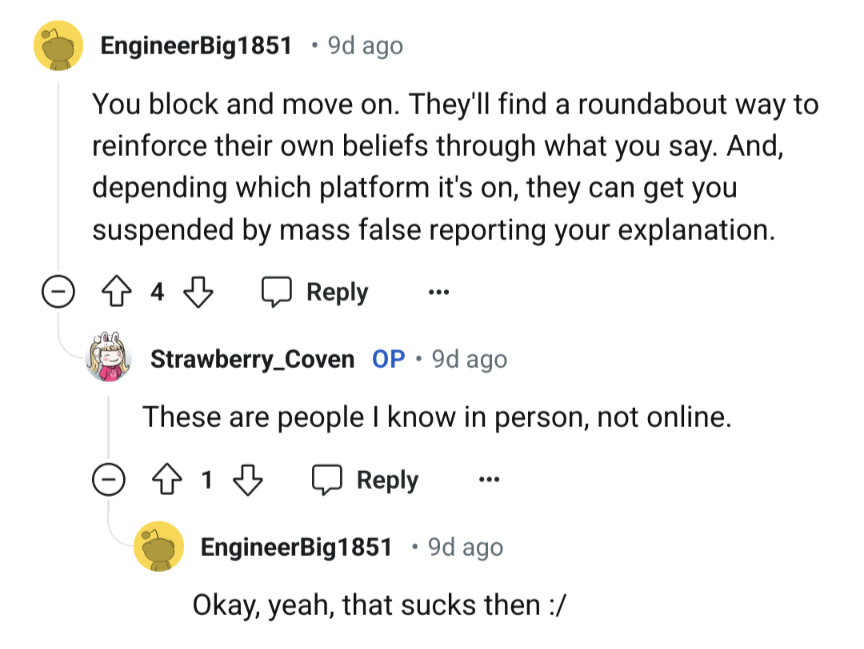

the above guy would like to know what combination of buttons to press to counter the "that just sounds like stealing from artists" attack. a commenter leaps in to help and immediately impales himself:

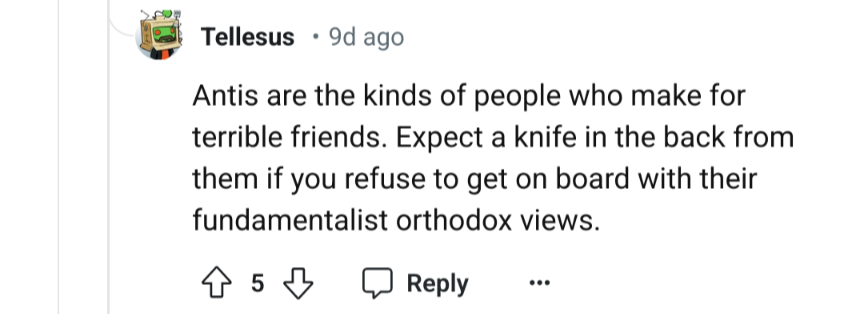

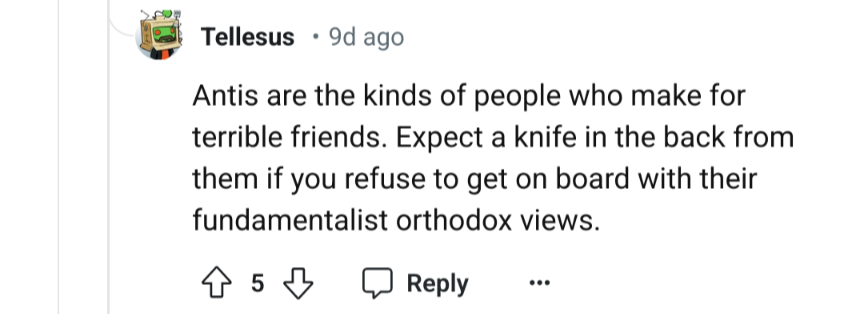

you hate to see it. another commenter points out that, well... maybe these people just aren't your friends.

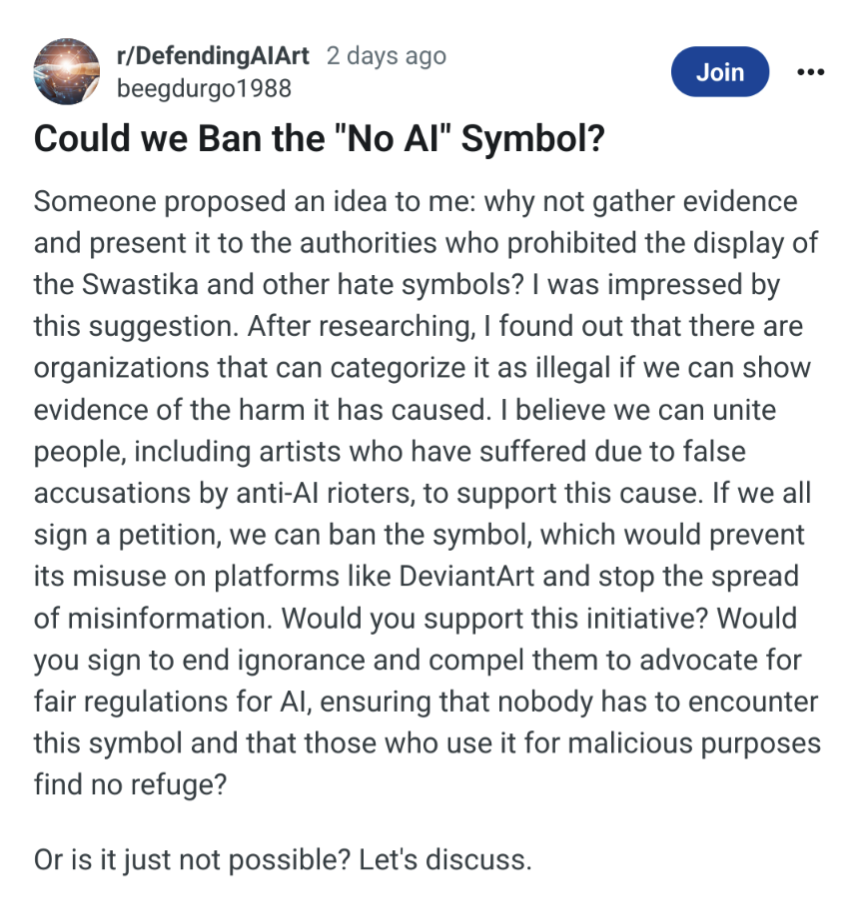

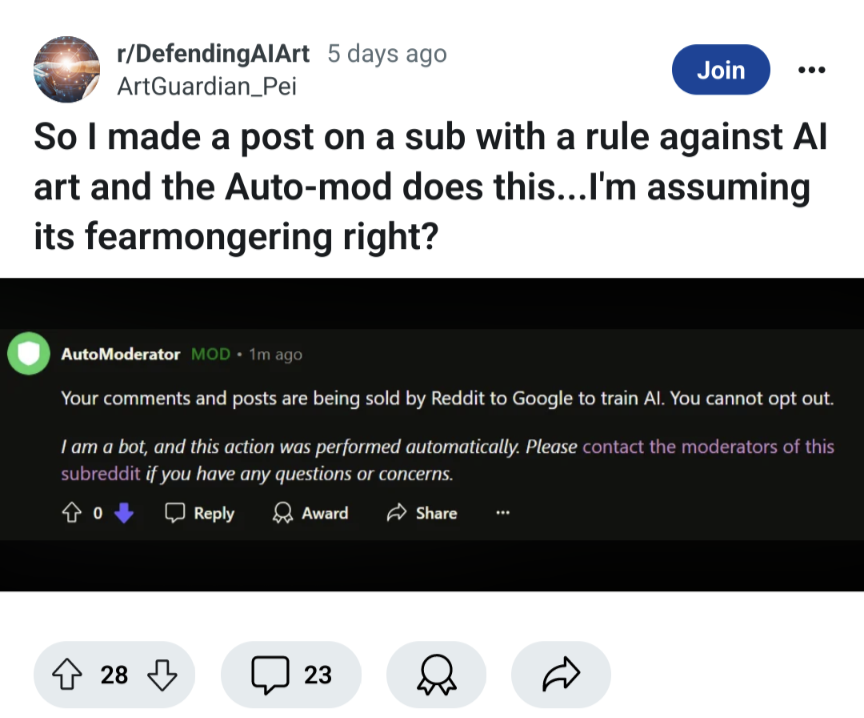

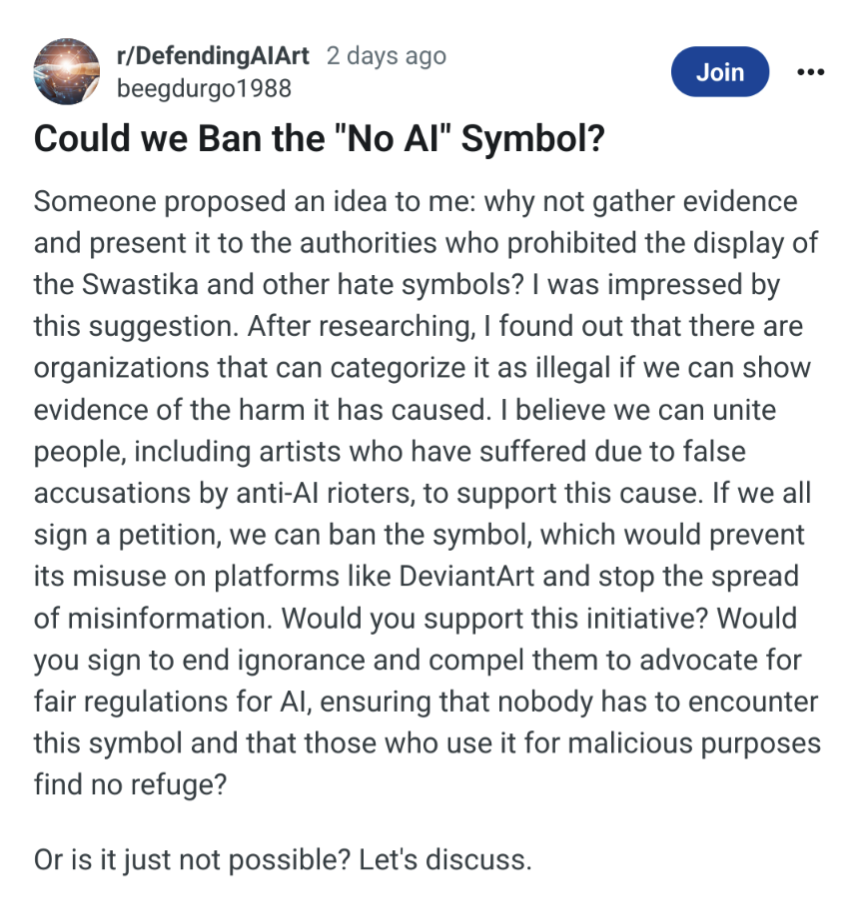

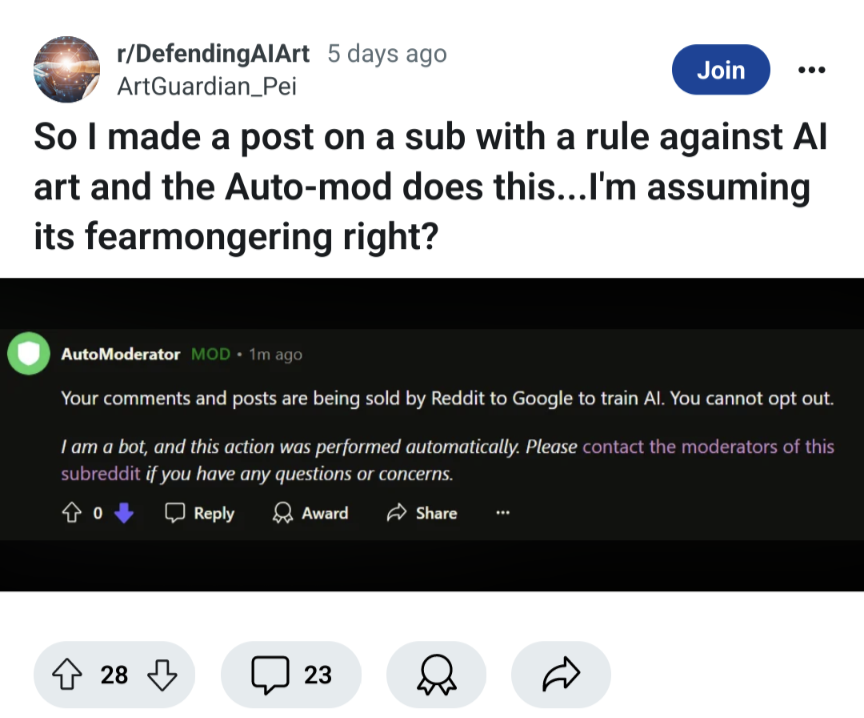

to close out, an example of fearmongering:

I don't see any reason why being trained on writing informed by correct knowledge would make it correct frequently, unless you're expecting it to just lift sentences verbatim from its training data.