There has to be soy milk or almond milk or something else that could be used.

If you have a gun, never get within knife range.

Definitely in scope for the community, though.

The true measure of a man is whether or not he's been bitten by a radioactive spider.

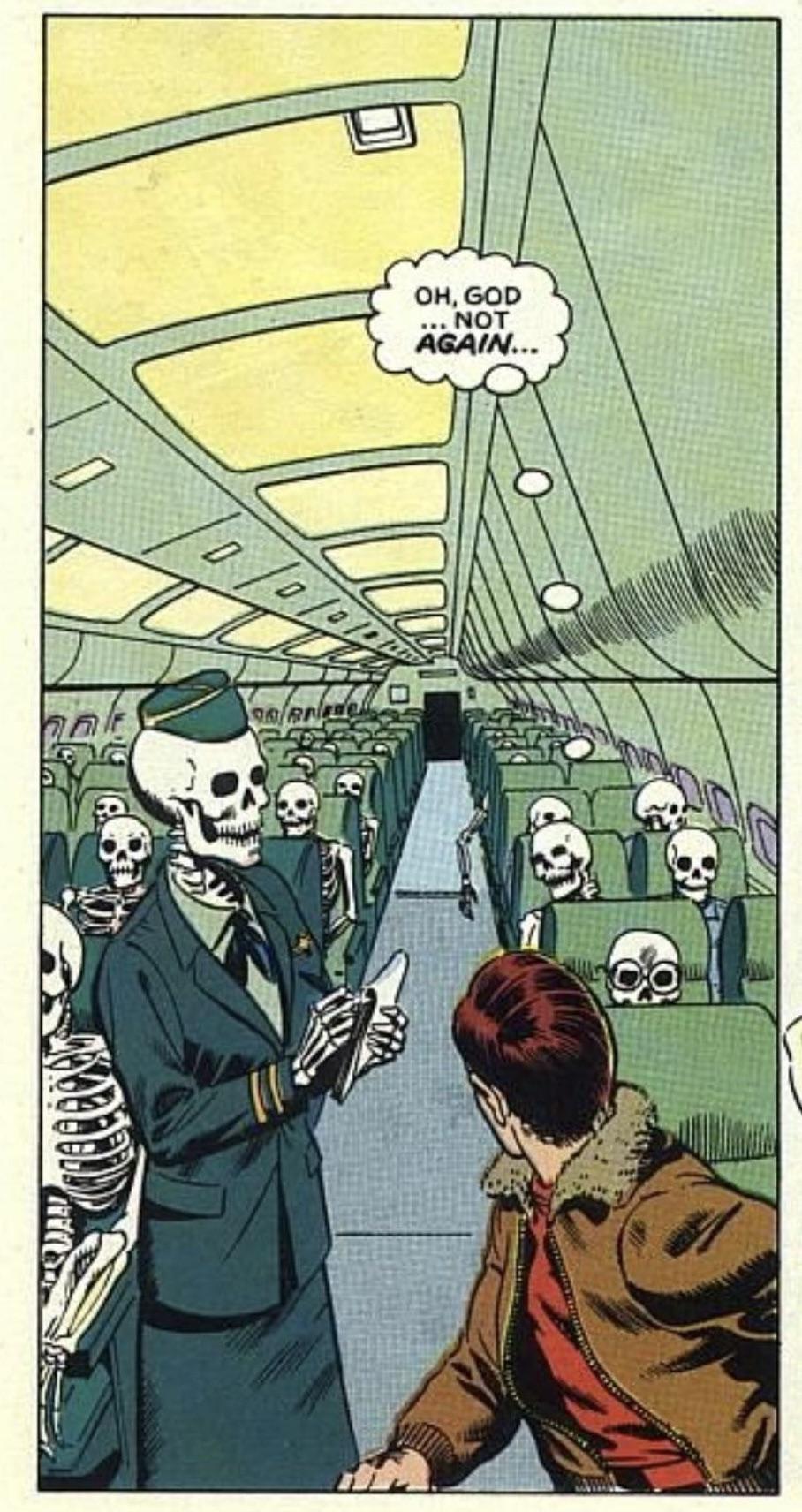

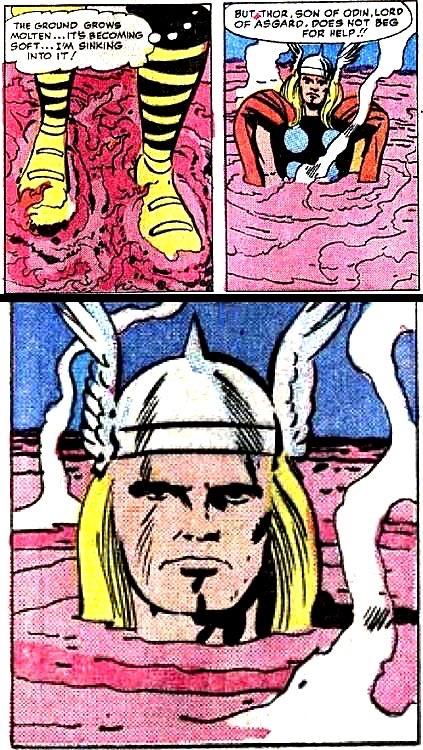

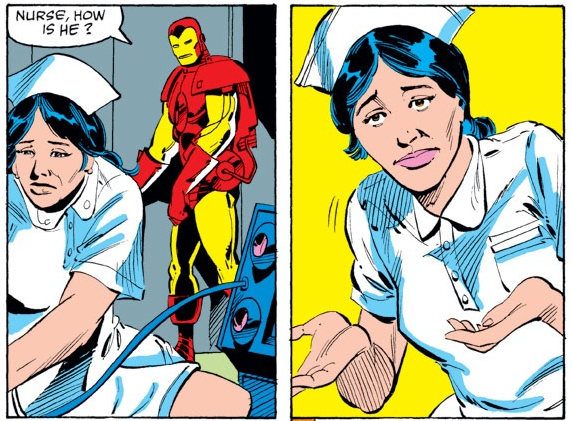

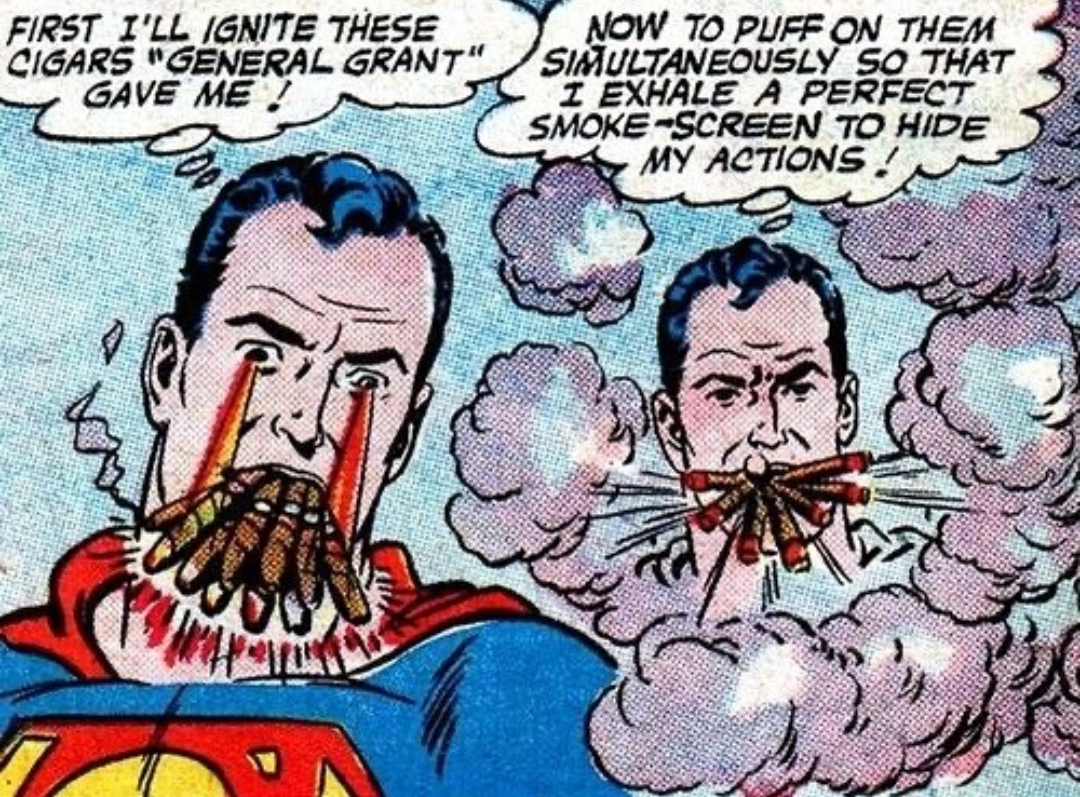

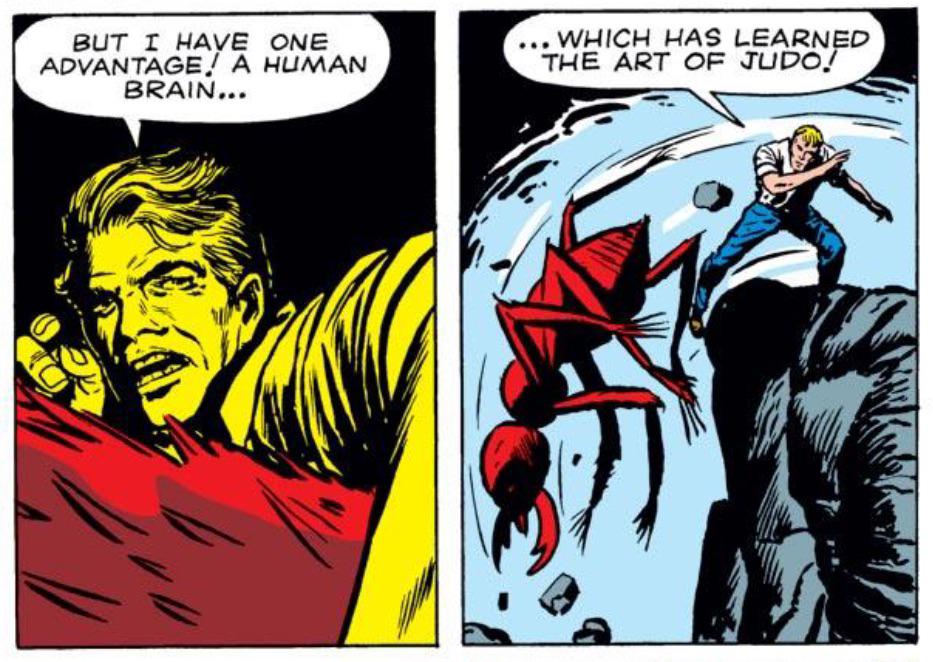

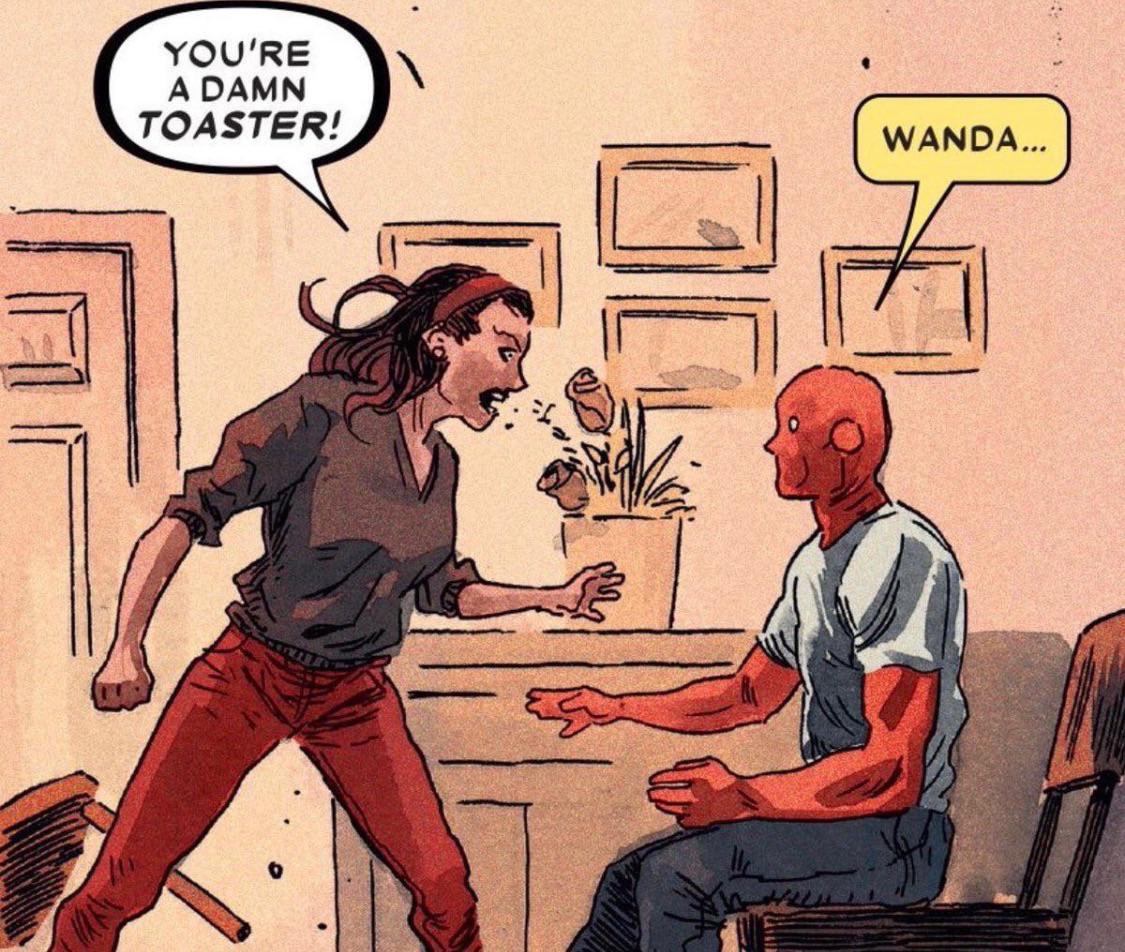

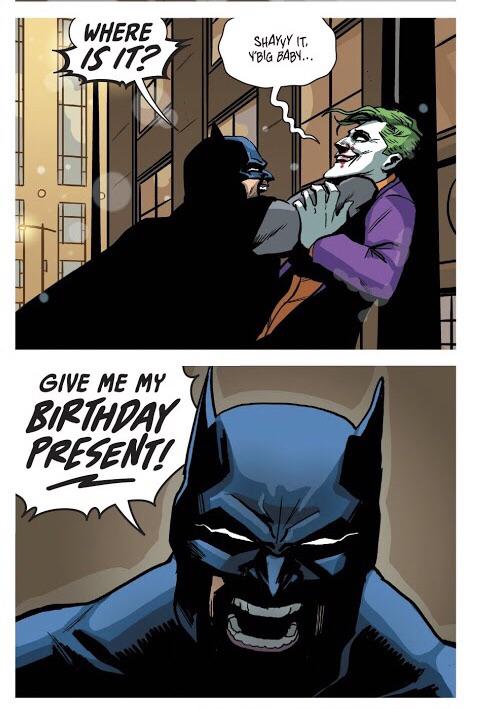

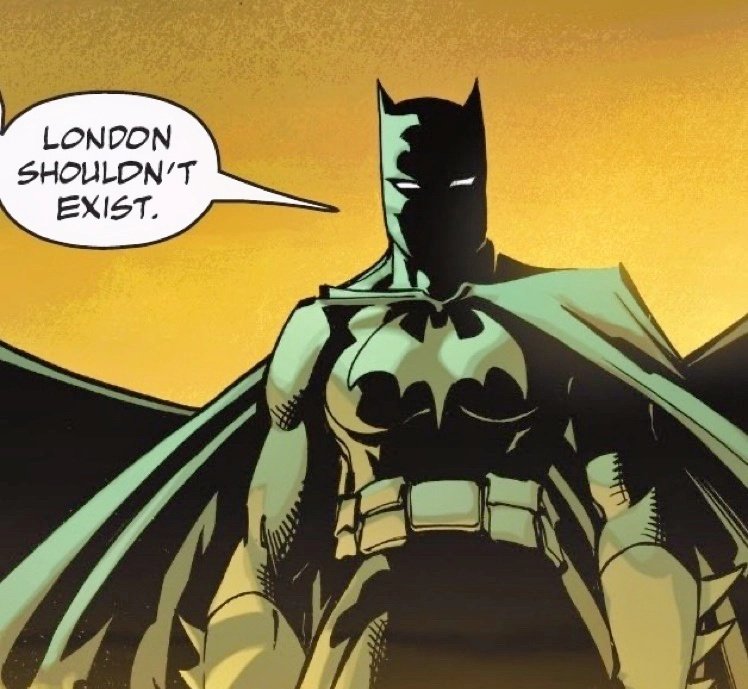

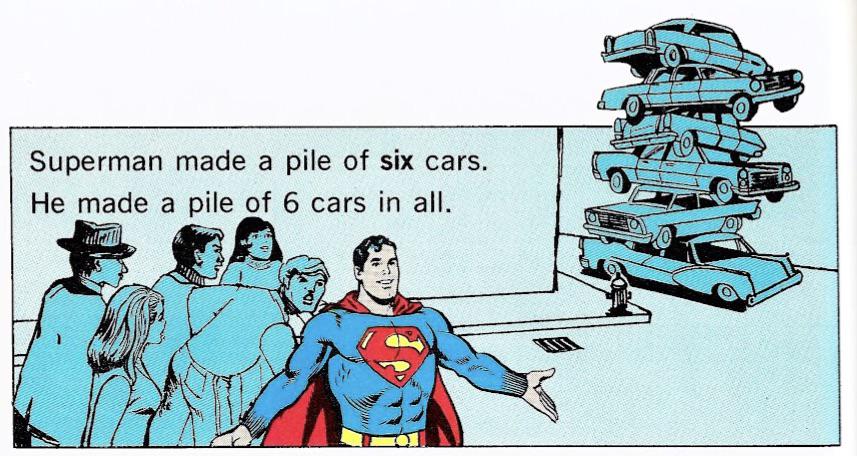

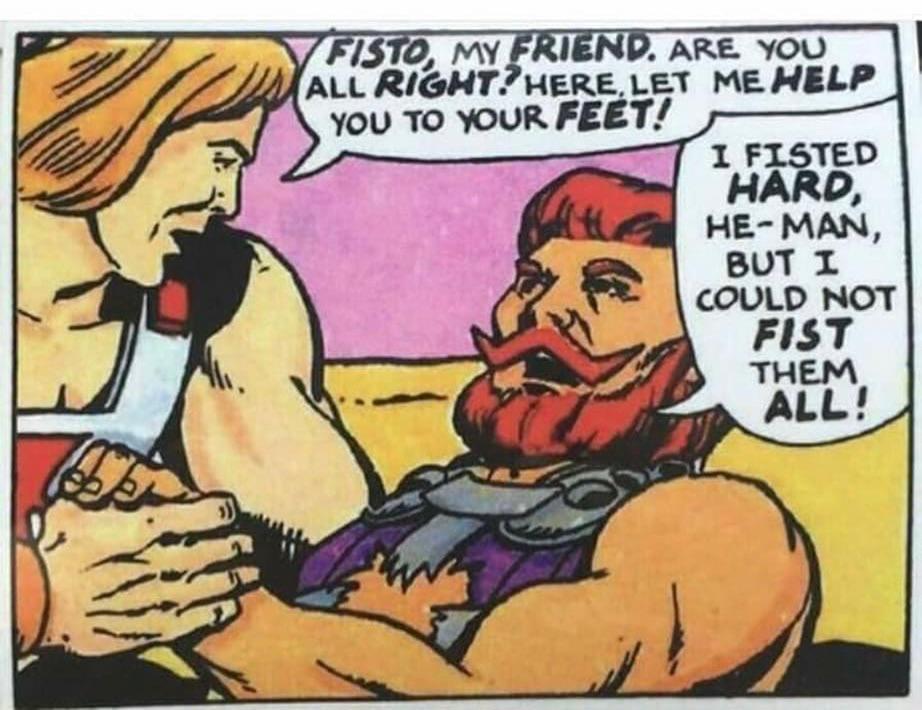

If Wonder Woman doing over-the-top BDSM qualifies, then I see some even better examples on /r/outofcontextcomics that I think I'll submit.

Upon investigation, apparently there is an /r/outofcontextcomics on Reddit with years of submissions which can be sorted by top score and pillaged for comics.

No problem. I don't mind trying to help if you get stuck -- ComfyUI is more complicated to get going with than Automatic1111, especially if you're not familiar with how the generation works internally, as ComfyUI kind of exposes you to the inner workings more -- but I just don't want to try rewriting the zillion YouTube videos and webpages out there that cover the "here is how to get ComfyUI installed" process. I don't even know what OS you're using, and some of that setup is OS-specific.

Context (which I had to look up, since I haven't played Homefront):

https://en.wikipedia.org/wiki/Homefront_%28video_game%29

Homefront is a first-person shooter video game developed by Kaos Studios and published by THQ. The game tells the story of a resistance movement fighting in the near-future against the military occupation of the Western United States by a reunified Korea.

So, I'm not going to write up a "this is how to install and start ComfyUI and view it in a Web browser" tutorial -- like, lots of people have done that a number of times over online, and better than I could, and it'd just be duplicating effort, probably badly. You'll want to look at them to get ComfyUI up and running, if you have not yet.

My understanding is that if you install ComfyUI and load a workflow -- which I've provided -- and you're missing any nodes or models used in that workflow, ComfyUI will tell you. That's why I attach the workflow JSON file, so that people can use that to reproduce the environment (and should be able to reproduce this image exactly).

Since the Threadiverse doesn't do file attachments on posts, the workflow is compressed with xz and then converted to text with Base64 for posting here. On a Linux system, you can reconstitute the workflow file by pasting the text in the spoiler section into a file (say, "workflow.json.xz.base64") and running:

$ base64 -d <workflow.json.xz.base64 | xz -d > workflow.json
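If you're not on Linux, or don't have xz installed, the same two steps can be done with Python's standard library. This is just a minimal sketch of the pipeline above (Base64-decode, then xz-decompress); the function names are mine, and the filenames are the example ones from above.

```python
import base64
import lzma
from pathlib import Path


def decode_workflow(encoded_text: str) -> bytes:
    """Reverse the posting pipeline: Base64 text -> xz data -> original bytes."""
    # With its default settings, b64decode skips characters outside the
    # Base64 alphabet, so newlines from copy-pasting are harmless.
    compressed = base64.b64decode(encoded_text)
    # lzma.decompress auto-detects the .xz container format.
    return lzma.decompress(compressed)


def decode_workflow_file(src: str, dst: str) -> None:
    """Read the pasted Base64 text from src and write the decoded file to dst."""
    Path(dst).write_bytes(decode_workflow(Path(src).read_text()))
```

Calling `decode_workflow_file("workflow.json.xz.base64", "workflow.json")` should leave you with the same workflow.json that the shell command produces.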

There's an extension that I use that makes it convenient to download nodes in ComfyUI: ComfyUI Manager

https://github.com/ltdrdata/ComfyUI-Manager

It works kind of like Automatic1111's built-in extension downloader, if you've used that.

I think -- from memory, as I'm on my phone at the moment -- that I'm only using one node that doesn't come with the base package, SD Ultimate Upscaler. It's a port from Automatic1111 to ComfyUI of an upscaler that breaks the image up into tiles and upscales it using an upscaling model; it lets me render the image at 1260x720 and then just upscale it to the resolution in which it was posted. If you install ComfyUI Manager, you can use that to download and install SD Ultimate Upscaler and whatever else you might want in terms of nodes. It also provides an option to one-click update them when they get new releases.
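If you'd rather check which nodes the workflow uses before loading it, you can pull the node types out of the JSON yourself. This is a rough sketch that assumes the workflow file has a top-level "nodes" list whose entries each carry a "type" field, which is what workflow files saved from the ComfyUI web interface have looked like when I've opened them; treat that layout as an assumption, not a documented format. The function name is made up.

```python
import json
from collections import Counter


def node_types(workflow_text: str) -> Counter:
    """Count node types in a workflow saved from the ComfyUI web interface.

    Assumption: the JSON has a top-level "nodes" list and each entry has a
    "type" field. Entries without one are counted as "<unknown>".
    """
    workflow = json.loads(workflow_text)
    return Counter(
        node.get("type", "<unknown>") for node in workflow.get("nodes", [])
    )
```

Running it over the text of workflow.json and printing the result gives you a list of names to compare against what's installed; ComfyUI will still tell you about anything missing when you actually load the workflow.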

I don't think that it can automatically download models, though it'll prompt you if you don't have them. I believe -- again going from memory -- that there are two models used here: the NewReality model derived from Flux, and the SwinIR 4x upscaler model. I probably should have used the 2x model, as I'm scaling by a factor of 2; I changed that in my most recent submitted images.

You can google for either of them using their name, but NewReality is on civitai.com, which you'll need an account on:

https://civitai.com/models/161068/stoiqo-newreality-flux-sd35-sdxl-sd15

There are several versions of the NewReality model, based on various base models. You want to click on the right version, the one based on Flux 1D.Alpha; the default when you go to the page has the SD3.5Alpha version checked. Then click "Download".

That gets dropped into the models/checkpoints directory in the ComfyUI installation.

I don't remember exactly where I got my copy of the SwinIR upscaler model; I think it was from HuggingFace.

This is the homepage of the SwinIR upscaler. The models there should also work, though it looks like they use different filenames:

https://github.com/JingyunLiang/SwinIR/releases

The SwinIR_4x upscaler gets dropped into the models/upscale_models directory in the ComfyUI installation.

You need to restart ComfyUI after adding new models for it to detect them.

As a warning, if you haven't used a Flux-based model before, it can be slow compared to the SD models that I've used.

If you've never done any local image generation before at all, then you're going to need a GPU with a fair bit of VRAM -- I don't know what the minimum requirements are to run this model, though it might say on the NewReality model page on Civitai. I use a 24GB card, but I know that it's got some headroom, because I've generated at higher resolution than I am here. Hypothetically, I think one can run ComfyUI on a CPU alone, but it'd be unusably slow.

I haven't looked into any actual decision process, and personally, I'd like to be able to post vector files myself, but there are some real concerns that I suspect apply (and are probably also why other sites, like Reddit, don't provide SVG support).

SVG can contain JavaScript, which can introduce security concerns.

My guess is that there are resource-exhaustion issues. With a raster format, like, say, PNG, you're probably only going to create issues with very large images, which are easy to filter out -- and the existing system does place limits on that. With a typical, unconstrained vector format like PostScript or SVG, you can probably require an arbitrarily long amount of rendering time from the renderer (and maybe memory usage; I don't know how current renderers work).

At least some SVG renderers support references to external files. That could permit, to some degree, deanonymizing people who view them -- like, a commenter could see which IP addresses are viewing a comment. That's actually probably a more general privacy issue with Lemmy today, but it can at least theoretically be addressed by modifying Threadiverse server software to rewrite comments and by propagating and caching images, whereas SVG support would bake external reference support in.

I think that an environment that permits arbitrary vector files to be posted would probably require some kind of more-constrained format, or at minimum, some kind of hardened filter on the instance side to block or process images to convert them into a form acceptable for mass distribution by anonymous users.
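To give a rough idea of what that kind of instance-side filter might check for, here's a small Python sketch that flags the three concerns above: script elements and event-handler attributes, external references, and a crude size cap. To be clear, this is an illustration and not anything Lemmy does; the function name and the element limit are made up, and a real filter would need to cover a lot more (CSS `url()` references and `foreignObject`, for example). It would also want a hardened XML parser, since Python's documentation warns that the standard-library XML modules aren't secure against maliciously constructed input.

```python
import xml.etree.ElementTree as ET

MAX_ELEMENTS = 10_000  # hypothetical cap; a real limit would need tuning


def _local(name: str) -> str:
    """Strip an ElementTree namespace prefix of the form '{uri}name'."""
    return name.rsplit("}", 1)[-1]


def svg_problems(svg_text: str) -> list[str]:
    """Return a list of reasons an SVG might be rejected (empty if none found)."""
    root = ET.fromstring(svg_text)
    problems = []
    count = 0
    for element in root.iter():
        count += 1
        if _local(element.tag).lower() == "script":
            problems.append("script element")
        for attr, value in element.attrib.items():
            name = _local(attr).lower()
            if name.startswith("on"):
                # onclick, onload, and so on can run JavaScript
                problems.append(f"event handler attribute: {name}")
            elif name == "href" and value.strip().lower().startswith(
                ("http:", "https:", "//")
            ):
                # covers both href and xlink:href pointing off-site
                problems.append(f"external reference: {value}")
    if count > MAX_ELEMENTS:
        problems.append(f"too many elements: {count}")
    return problems
```

An element count is only a crude stand-in for rendering cost, since a small file can still be expensive to draw, which is part of why I think a more-constrained format would probably be the safer route.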

Note that Lemmy does have support for formats other than SVG, including video files -- just not anything vector ATM.

If the art you want to post is flat-color, my guess is that your closest bet is probably posting a raster version of it as PNG or maybe lossless webp.

You can also store an SVG somewhere else that permits hosting SVG and provide an external link to that SVG file.