The part of the study where they talk about how they determined the flawed mathematical formula it used to calculate the glue-on-pizza response was mindblowing.

^(I^ ^did^ ^not^ ^read^ ^the^ ^study.)^

cracks? it doesn't even exist. we figured this out a long time ago.

They are large LANGUAGE models. It's no surprise that they can't solve the mathematical problems in the study. They are trained for text production. We already knew that they were no good at counting things.

"You see this fish? Well, it SUCKS at climbing trees."

That's not how you sell fish though. You gotta emphasize how at one time we were all basically fish and if you buy my fish for long enough, those fish will eventually evolve hands to climb!

"Premium fish for sale: GUARANTEED to never climb your trees"

So do I every time I ask it a slightly complicated programming question

And sometimes even really simple ones.

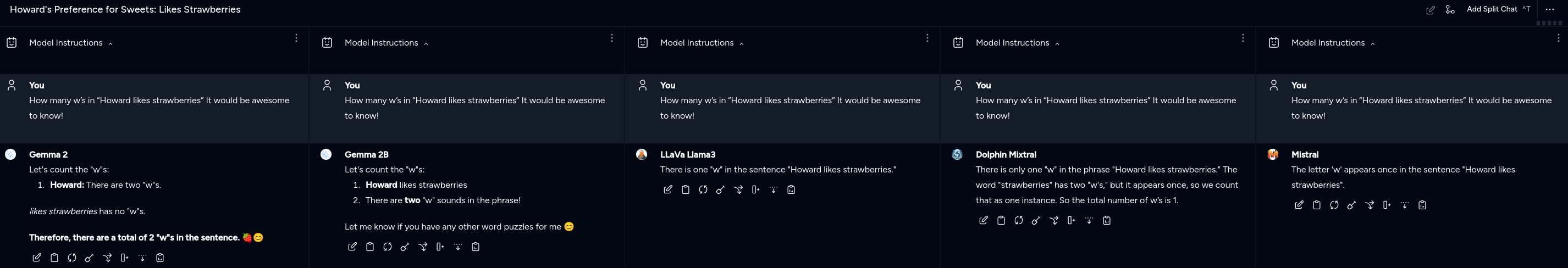

How many w's in "Howard likes strawberries" It would be awesome to know!
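For what it's worth, this is a trivial job for ordinary code, which is part of why the failure stands out. A minimal Python sketch (the helper name `count_letter` is made up for illustration; it isn't from the thread or any library):

```python
def count_letter(text: str, letter: str) -> int:
    """Count case-insensitive occurrences of a single letter in text."""
    return text.lower().count(letter.lower())


sentence = "Howard likes strawberries"

# Total across the whole sentence.
total = count_letter(sentence, "w")

# Per-word breakdown, so the total is easy to check by eye.
per_word = {word: count_letter(word, "w") for word in sentence.split()}

print(total)
print(per_word)
```

The per-word breakdown shows one "w" in "Howard", none in "likes", and one in "strawberries", for a total of 2.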

So I keep seeing people reference this... And I found it curious that LLMs would have problems with something like this. So I asked them... Several of them...

Outside of this image... Codestral (my default) actually got it correct and didn't talk itself out of being correct... But that's no fun, so I asked 5 others, at once.

What's sad is that Dolphin Mixtral is a 26.44GB model...

Gemma 2 is the 5.44GB variant

Gemma 2B is the 1.63GB variant

LLaVa Llama3 is the 5.55GB variant

Mistral is the 4.11GB variant

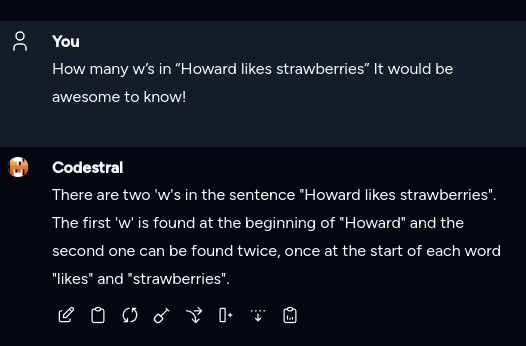

So I asked Codestral again because why not! And this time it talked itself out of being correct...

Edit: fixed newline formatting.

Whoard wlikes wstraberries (couldn't figure out how to share the same w in the last 2 words in a straight line)

Did anyone believe they had the ability to reason?

Like 90% of the consumers using this tech are totally fine handing over tasks that require reasoning to LLMs and not checking the answers for accuracy.

People are stupid, OK? I've met people who think that it can in fact do math "better than a calculator".

Yes

The tested LLMs fared much worse, though, when the Apple researchers modified the GSM-Symbolic benchmark by adding "seemingly relevant but ultimately inconsequential statements" to the questions

Good thing they're being trained on random posts and comments on the internet, which are known for being succinct and accurate.

Yeah, especially given that so many popular vegetables are members of the brassica genus

Definitely true! And ordering pizza without rocks as a topping should be outlawed, it literally has no texture without it, any human would know that very obvious fact.

statistical engine suggesting words that sound like they'd probably be correct is bad at reasoning

How can this be??

I would say that if anything, LLMs are showing cracks in our way of reasoning.

Or the problem with tech billionaires selling "magic solutions" to problems that don't actually exist. Or how people are too gullible in the modern internet to understand when they're being sold snake oil in the form of "technological advancement" when it's actually just repackaged plagiarized material.

The results of this new GSM-Symbolic paper aren't completely new in the world of AI research. Other recent papers have similarly suggested that LLMs don't actually perform formal reasoning and instead mimic it with probabilistic pattern-matching of the closest similar data seen in their vast training sets.

WTF kind of reporting is this, though? None of this is recent or new at all, like in the slightest. I am shit at math, but I have a high-level understanding of statistical modeling concepts, mostly from about a decade ago, and even I knew this. I recall a stats PhD describing models as "stochastic parrots"; nothing more than probabilistic mimicry. It was obviously no different the instant LLMs came on the scene. If only tech journalists bothered to do a superficial amount of research, instead of being spoon-fed spin from tech bros with a profit motive...

i think it's because some people have been alleging reasoning is happening or is very close to it

It's written as if they literally expected AI to be self-reasoning and not just a mirror of the bullshit that is put into it.

Probably because that's the common expectation due to calling it "AI". We're well past the point of putting the lid back on that can of worms, but we really should have saved that label for... y'know... intelligence, that's artificial. People think we've made an early version of Halo's Cortana or Star Trek's Data, and not just a spellchecker on steroids.

The day we make actual AI is going to be a really confusing one for humanity.

Here's the cycle we've gone through multiple times and are currently in:

AI winter (low research funding) -> incremental scientific advancement -> breakthrough in new capabilities, from multiple incremental advancements to the scientific models building on each other over time (expert systems, LLMs, neural networks, etc.) -> engineering creates new tech products/frameworks/services based on the new science -> hype for new tech creates sales and economic activity, research funding, subsidies, etc. -> (for LLMs we're here) people become familiar with the new tech's capabilities and limitations through use -> hype spending bubble bursts when the overspend doesn't keep up with "infinite money, line goes up" expectations or with new research breakthroughs -> AI winter -> etc...

I feel like a draft landed on Tim's desk a few weeks ago, which would explain why they suddenly pulled back on OpenAI funding.

People on the removed superfund birdsite are already saying Apple is missing out on the next revolution.

One time I exposed deep cracks in my calculator's ability to write words with upside down numbers. I only ever managed to write BOOBS and hELLhOLE.

LLMs aren't reasoning. They can do some stuff okay, but they aren't thinking. Maybe if you had hundreds of them with unique training data all voting on proposals you could get something along the lines of a kind of recognition, but at that point you might as well just simulate cortical columns and try to do Jeff Hawkins' idea.

I hope this gets circulated enough to reduce the ridiculous amount of investment and energy waste that the ramping-up of "AI" services has brought. All the companies have just gone way too far off the deep end with this shit that most people don't even want.

People working with these technologies have known this for quite a while. It's nice of Apple's researchers to formalize it, but nobody is really surprised, least of all the companies funnelling traincars of money into the LLM furnace.
