the_dunk_tank

It's the dunk tank.

This is where you come to post big-brained hot takes by chuds, libs, or even fellow leftists, and tear them to itty-bitty pieces with precision dunkstrikes.

Rule 1: All posts must include links to the subject matter, and no identifying information should be redacted.

Rule 2: If your source is a reactionary website, please use archive.is instead of linking directly.

Rule 3: No sectarianism.

Rule 4: TERF/SWERFs Not Welcome

Rule 5: No ableism of any kind (that includes stuff like libt*rd)

Rule 6: Do not post fellow hexbears.

Rule 7: Do not individually target other instances' admins or moderators.

Rule 8: The subject of a post cannot be low hanging fruit, that is comments/posts made by a private person that have low amount of upvotes/likes/views. Comments/Posts made on other instances that are accessible from hexbear are an exception to this. Posts that do not meet this requirement can be posted to !shitreactionariessay@lemmygrad.ml

Rule 9: if you post ironic rage bait im going to make a personal visit to your house to make sure you never make this mistake again

While we're here, can I get an explanation on that one too? I think I'm having trouble separating the concept of algorithms from the concept of causality in that an algorithm is a set of steps to take one piece of data and turn it into another, and the world is more or less deterministic at the scale of humans. Just with the caveat that neither a complex enough algorithm nor any chaotic system can be predicted analytically.

I think I might understand it better with some examples of things that might look like algorithms but aren't.

An algorithm is:

For the sake of argument, let’s be real generous with the terms “unambiguous”, “sequence”, “goal”, and “recognizable” and say everything is an algorithm if you squint hard enough. It’s still not the end-all-be-all of takes that it’s treated as.

When you create an abstraction, you remove context from a group of things in order to focus on their shared behavior(s). By removing that context, you’re also removing the ability to describe and focus on non-shared behavior(s). So picking and choosing which behavior to focus on is not an arbitrary or objective decision.

If you want to look at everything as an algorithm, you’re losing a ton of context and detail about how the world works. This is a useful tool for us to handle complexity and help our minds tackle giant problems. But people don’t treat it as a tool to focus attention. They treat it as a secret key to unlocking the world’s essence, which is just not valid for most things.

Thanks for the help, but I think I'm still having some trouble understanding what that all means exactly. Could you elaborate on an example where thinking of something as an algorithm results in a clearly and demonstrably worse understanding of it?

Algorithmic thinking is often bad at examining aspects of evolution. Like the fact that crabs, turtles, and trees are all convergent forms that have each evolved multiple times through different paths. What is the unambiguous instruction set to evolve a crab? What initial conditions do you need for it to work? Can we really call the “instruction set” to evolve crabs “prescribed”? Prescribed by whom? Like, there’s a really common mental pattern with evolutionary thinking where we want to sort variations into meaningful and not-meaningful buckets, where this particular aspect of this variation was advantageous, whereas this one is just a fluke. Stuff like that. That’s much closer to algorithmic thinking than the reality where it is a truly random process and the only thing that makes it create coherent results is relative environmental stability over a really long period of time.

I would also guess that algorithmic thinking would fail to catch many aspects of ecological systems, but have thought less about that. It’s not that these subjects can’t gain anything by looking at them through an algorithmic lens. Some really simple mathematical models of population growth are scarily accurate, actually. But insisting on only seeing them algorithmically will not bring you closer to the essence of these systems either.

Okay, I think I get it now. I see how one could really twist something like your evolution example every which way to make it look like an algorithm. Things like saying the process to crabs is prescribed by the environmental conditions selecting for crab like traits or whatever, but I can see how doing that is so overly broad as to be a useless way to analyze the situation.

One more thing: I don't know enough about algorithms to really say, but isn't it possible for an algorithm to produce wildly varying results from nearly identical inputs? Like how a double pendulum is analytically unpredictable. What's more, could the algorithmic nature of a system be entirely obscured as a result of it being composed of many associated algorithms linked input to output in a net, some of which may even be recursively linked? That looks to me like it could be a source of randomness and ambiguity in an algorithmic system that would be borderline impossible to suss out.

I think what you’re talking about starts to get into definitional differences between different fields, but regardless I think the answer to the underlying questions is “yes”. We can talk about a function’s “purity”, meaning that if a function is pure, it will always produce the same output for the same input and will not change the state of any other aspects of the system it exists within. This concept is different from chaotic systems like you’re discussing, where the “distance” between outputs tends to be large between inputs whose distance is small. So some computer systems have the properties you’re talking about because they’re impure. Others have them because they’re chaotic.
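To make the purity vs. chaos distinction a bit more concrete, here's a rough Python sketch. The function names are made up for illustration, and the logistic map with r = 4 is just a standard textbook example of a chaotic update rule that I'm assuming here, not something specific to this discussion.

```python
# Hypothetical names throughout; an illustration, not a formal definition.

call_count = 0  # shared state that the impure function mutates


def pure_double(x):
    """Pure: the same input always gives the same output, no side effects."""
    return 2 * x


def impure_bump(x):
    """Impure: reads and changes state outside itself, so repeated calls
    with the same input give different outputs."""
    global call_count
    call_count += 1
    return x + call_count


def logistic_step(x, r=4.0):
    """Pure but chaotic: one step of the logistic map (r = 4 is an assumed,
    commonly used chaotic setting)."""
    return r * x * (1.0 - x)


def trajectory(x, steps):
    """Apply logistic_step repeatedly and collect every value."""
    values = []
    for _ in range(steps):
        x = logistic_step(x)
        values.append(x)
    return values


run_a = trajectory(0.2, 60)
run_b = trajectory(0.2 + 1e-10, 60)

# Largest separation the two runs reach, despite starting 1e-10 apart.
max_gap = max(abs(p - q) for p, q in zip(run_a, run_b))

print(pure_double(3) == pure_double(3))  # pure: repeatable
print(impure_bump(3) == impure_bump(3))  # impure: not repeatable
print(trajectory(0.2, 60) == run_a)      # chaotic, but still deterministic
print(max_gap)                           # far larger than the starting gap
```

The point of the last two lines is that `trajectory` is fully repeatable for an identical input and still blows a tiny input difference up into a large output difference.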

A lot of functions which are both pure and chaotic are used as pseudo-random number generators, meaning they will always produce the same number for a given seed, but are exceedingly difficult to predict. But creating perfectly chaotic systems is very difficult (maybe mathematically impossible? idr) and a lot of the math used in cryptography involves attacking functions by finding ways to reverse them efficiently, as well as finding ways to prevent those attacks.
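A toy sketch of the "same seed, same numbers" idea and of what reversing a generator looks like. This is a linear congruential generator using the widely published Numerical Recipes constants; the function names are hypothetical. It is not chaotic in any strict mathematical sense and it is trivially reversible, which is exactly why nothing like it should be used for cryptography.

```python
# Toy linear congruential generator (LCG). Function names are hypothetical.
# Not suitable for cryptography.

A = 1664525
C = 1013904223
M = 2**32


def lcg_next(state):
    """Pure function: the next state depends only on the current state."""
    return (A * state + C) % M


def lcg_sequence(seed, count):
    """Generate `count` numbers from `seed`; the same seed always
    yields the same list."""
    values = []
    state = seed
    for _ in range(count):
        state = lcg_next(state)
        values.append(state)
    return values


# A is odd, so it has a modular inverse mod 2**32
# (pow with exponent -1 needs Python 3.8+).
A_INV = pow(A, -1, M)


def lcg_prev(state):
    """Run the generator backwards: recover the previous state."""
    return ((state - C) * A_INV) % M


print(lcg_sequence(42, 3) == lcg_sequence(42, 3))  # same seed, same numbers
print(lcg_prev(lcg_next(42)) == 42)                # one step forward, one step back
```

Anyone who sees a single output of this generator can walk it forwards or backwards, so the "hard to predict" part is what real generators have to work for.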

But yes, all of the things you mentioned can be sources of complexity that can make things chaotic, but that doesn’t necessarily make them nondeterministic. A lot of chaotic systems are sensitive to things like the exact millisecond at which some function runs or other sources of userspace randomness like user input or resource usage. Meanwhile, a good chunk of nondeterministic behavior in software comes from asynchronous race conditions.
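For the race condition part, here's a minimal sketch using Python's standard `threading` module. The names and sizes are arbitrary choices for illustration. Whether the unlocked version actually loses updates on a given run depends on interpreter and scheduling details, which is the whole point: the code is the same every time, the result doesn't have to be.

```python
import threading

THREADS = 4
INCREMENTS = 20_000


def run(use_lock):
    """Increment a shared counter from several threads and return the total."""
    counter = 0
    lock = threading.Lock()

    def worker():
        nonlocal counter
        for _ in range(INCREMENTS):
            if use_lock:
                with lock:
                    counter += 1
            else:
                # Read-modify-write without a lock: two threads can read the
                # same old value, so one of the updates may be lost.
                current = counter
                counter = current + 1

    threads = [threading.Thread(target=worker) for _ in range(THREADS)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter


expected = THREADS * INCREMENTS
print(run(use_lock=True) == expected)       # the lock makes the result repeatable
print(run(use_lock=False), "of", expected)  # may fall short, may vary between runs
```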

also, the word they actually mean is heuristic.

Can you say more things?

when you soften these words, what you're left with is a heuristic - a method that occasionally does what you expect but that's underspecified. it's a decision procedure where the steps aren't totally clear or that sometimes arrives at unexpected results because it fails to capture the underlying model of reality at play.

Oh this is a neat point. Thank you!

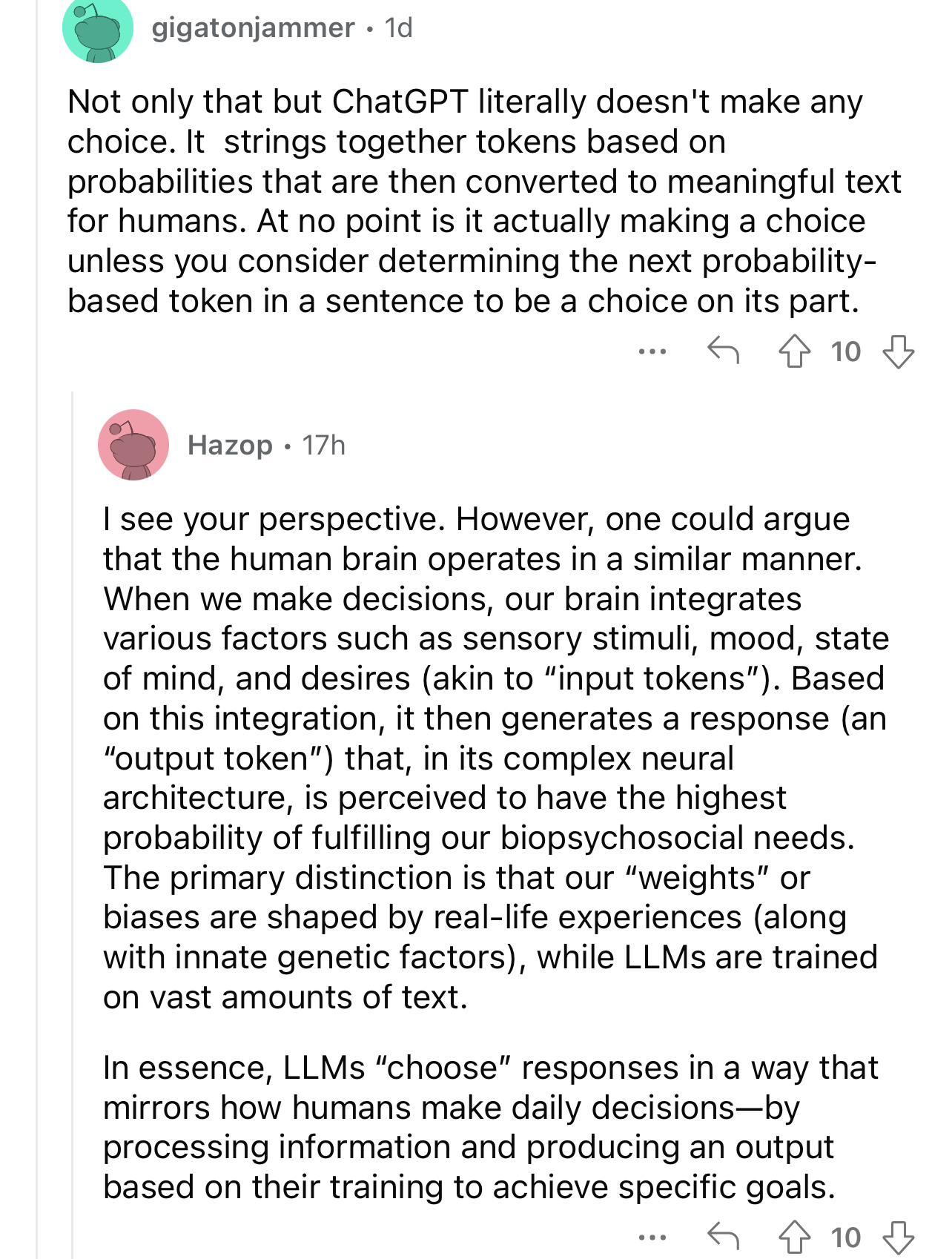

I'm not saying it isn't on some larger scale an impossible association to make as much as I'm saying it's presumptive and arrogant to say "in all fairness, it is" as if that's some indisputable claim.

I could just as easily say the human brain is just a sufficiently complex series of vacuum tubes. Or gears and clockwork. Or wheels. Same reductionist summary attempt, same omission of extraordinary evidence to cover the extraordinary claim.

Don't they also have evidence to suggest the brain's pathways work in ways that we just can't understand? I've seen 4th dimension processing thrown around, I think to do with smell or something (quantum tunneling needed for smell to work, or something physics based I can't remember), to describe how certain processes and understanding happen in parallel with or even before a stimulus, suggesting the brain can make connections in other ways than just neurons and electric current or something like that.

So like to compare the brain to a computer is completely reductionist and computer touchers love it lol.

I realise this comment is just "here's a load of patchy second hand stuff I've heard misrepresented, any idea what I'm talking about cos I fucking don't lmao" so apologies.

There's enough that's unclear or not yet understood for me to say that claiming "in all fairness" that the human brain is just an algorithm/meat-computer/whatever is lazy and arrogant reductionism, that's for sure.

Someone with a hammer, as the saying goes, thinks everything is a nail.

And a computer toucher wants everything to be neatly fitting into computer programming.

It was a neat analogy for the time.

Kinda like how "I'm a woman trapped inside a man's body" is reductionist for explaining the trans femme experience and transness academically but if you want a quick snappy one liner it can deliver a simple explanation to reduce wild misunderstanding in a layman but anyone who's a redditor will take it too far.

I can see it being comparable.

I take issue with the extraordinary and reductionist claim of that's all it is, prefaced with "in all fairness" as if to will computer toucher feelings into hard irrefutable reality. It's like when someone says "let's be honest" to preface an opinion where it isn't just their "honest" opinion but an implication that it should be everyone's "honest" opinion if they are "honest" as well.

speaking of 4th dimensional processing, https://en.wikipedia.org/wiki/Holonomic_brain_theory is pretty interesting imo

Oh it might have been this I heard getting chatted about 👀