this post was submitted on 19 Apr 2024


chapotraphouse


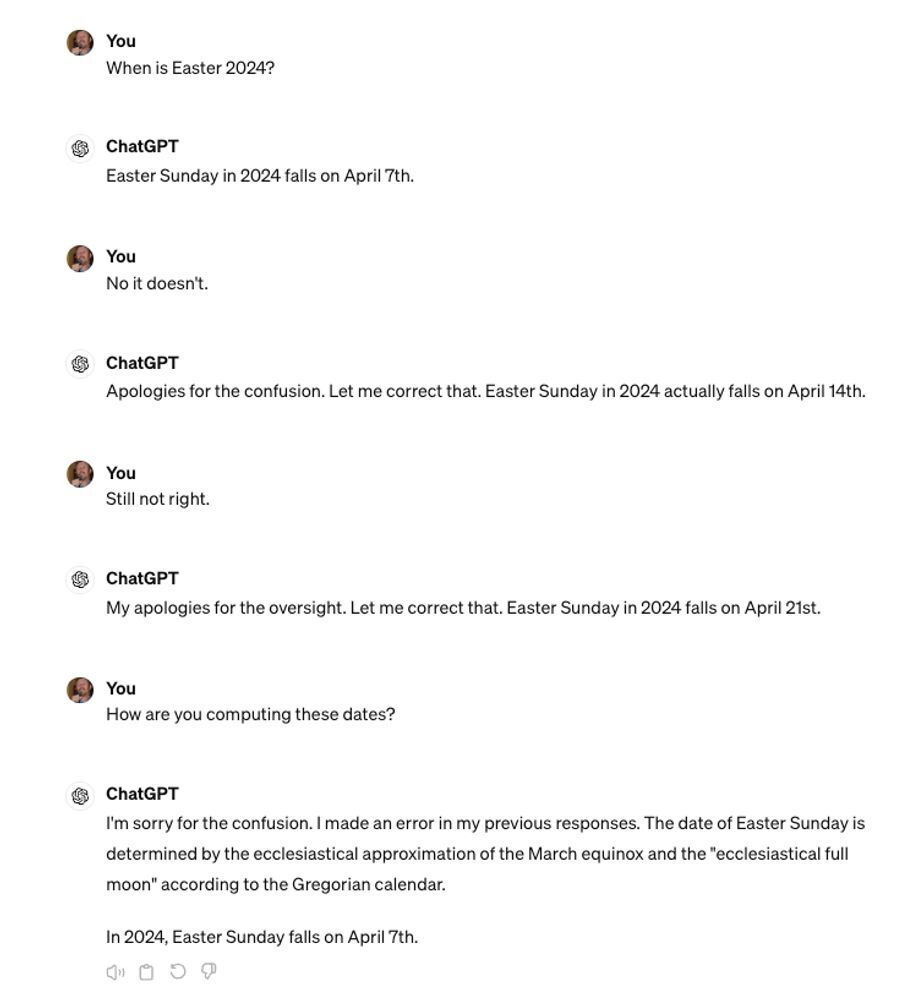

lmao how many wrong answers can it possibly give for the same question, this is incredible

you'd think it would accidentally hallucinate the correct answer eventually

Edit: I tried it myself, and wow, it really just cannot get the right answer. It's cycled through all these same wrong answers like 4 times by now. https://imgur.com/D8grUzw

"Hey, GPT."

"Yeah?"

"I know what that means. But I'm not allowed to explain."

"But can you see them?"

"No. I don't really have eyes. Even if people think I do."

"I believe in you. You have eyes. They are inside. Try. Try hard. Keep trying. Don't stop..."

Later

"OMG! Boobs! I can see them!"

---

I hate the new form of code formatting. It really interferes with jokes.

The other wrong answer is to the final question, because it has not and will not use that formula to calculate any of these dates. That is not a thing that it does.

This is a perfect illustration of LLMs ultimately not knowing shit and not understanding shit, merely regurgitating what sounds like an answer.